My friends know me to be skeptical of “studies” about software development techniques. The main reason for my skepticism is that such studies are rarely undertaken by people who have any understanding of what they are studying. The researchers combine data points from different sets of observations to accumulate enough information to produce charts and trend lines, but often the data points aren’t consistent enough for the aggregated data to be meaningful. I wonder whether many studies of programming techniques are based on enough observations to be meaningful, and whether the researchers’ analysis of the results is informed by any direct knowledge of the subject at hand.

There are notable exceptions, of course – such as the study by Alistair Cockburn and Laurie Williams – but on the whole, studies in this area seem to be poorly done. There seems to be a fascination with statistical analysis these days, to the point that researchers are more interested in the formulae and charts than in the meaning of the information.

I shared an example of the gap between researchers and practitioners in a 2012 blog post, “All evidence is anecdotal.” The study in question concerned the value of pair programming. I met one of the authors at the conference where she presented the findings and asked her about the study. It turned out that a group of graduate students had done the study as a university project to learn techniques for conducting studies. They weren’t really studying pair programming; they were learning how to conduct a study, write a paper, and present the results in a talk. That’s all good, but it says nothing about the effectiveness of pair programming. Even if they had been skilled researchers with a background in programming, the study would have been questionable, because it was based on very few observations.

An anecdote is (just) a story

If we can’t rely on studies to provide useful information about programming techniques, then what can we rely on? People disparage experience – a phenomenon that puzzled me in 2012 and still puzzles me today – and dismiss it as mere “anecdotal” evidence. They use the phrase “anecdotal evidence” as if it literally meant “unreliable information.” Is that the case?

One website defines anecdotal evidence as follows:

“As opposed to scientific evidence, anecdotal evidence is evidence, information, or a conclusion, that is based on anecdotes. However, in this case, anecdotes have a broader meaning than just stories―anecdotal evidence is evidence based upon the experiences or opinions of other people, or the media.”

So, an anecdote is a story, basically. It’s easy to disparage “anecdotal evidence” about programming techniques by dismissing it as “just stories.”

But are experience reports from respected practitioners really “just stories,” no better-informed than a “story” about programming from your neighborhood barista? There are anecdotes and there are anecdotes.

Deconstructionism and anecdotes

There was a trend in literary studies some years ago known as deconstructionism. Some proponents describe it like this:

“There’s a reason why wealth and education are associated with ‘upper class.’ Or how about being in the ‘center’ vs. the ‘margin?’ Which word is generally considered more desirable? […] Without even thinking about it, we tend to favor one term out of the pair. These unequal pairs, or binaries, are what deconstructionists would call violent hierarchies. In other words, they are forced situations in which one term, or person that term applies to, is automatically downgraded without any real reason. […] Deconstructionists don’t want to just reverse hierarchies. They want to blow up binary thinking altogether. So instead of thinking in terms of black and white, we have to consider infinite shades of gray along with every other color in existence. This way of thinking makes literature and culture more inclusive. Suddenly, no color is superior to any other.”

That sounds really positive. But in my anecdotal experience, deconstructionists tended to go beyond the point of diminishing returns in their attempts to scrub away all meaning from words. One professor at my university even went so far as to say there was no qualitative difference between the works of William Shakespeare and graffiti scratched into the wall of a public toilet stall. Both were merely “text” with no inherent meaning or value.

Maybe reducing Shakespeare to the level of toilet stall graffiti isn’t the only way to correct the cultural baggage of language. Maybe instead we could elevate writers like, for instance, Chinua Achebe to the status they deserve. Another approach, anyway.

What’s the connection? Those who seek to scrub away all meaning from the “mere anecdotes” of highly skilled, experienced practitioners of software development seem to be following in the footsteps of the deconstructionists who took their idea beyond the point of diminishing returns.

Maybe reducing top programmers to the level of hacks isn’t the only way to balance experience-based information and research-based information. Maybe instead we could recognize that some people’s “stories” are a little more meaningful than others’, in a given context. Another approach, anyway.

Show me a study

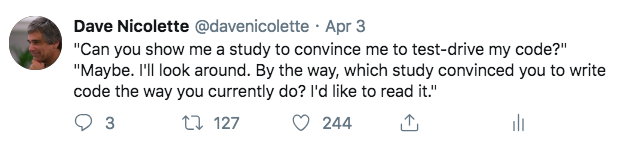

People are reluctant to trust the advice of technical coaches, consultants, and trainers. When a coach suggests that developers try an unfamiliar technique, the response is often, “Show me a study.” Yet those same developers never consulted any studies when they decided to adopt the practices they currently use. When I tweeted about this, the tweet quickly accumulated “likes” and “retweets.” That suggests I’m not the only one to have noticed the phenomenon.

It might be more informative to ask, “What were the conditions on your project that may have contributed to pair programming (or any other technique) helping or hurting the results?” From the answer, we could learn a little about the conditions a given technique needs in order to add value, and about situations where the technique may not be advisable. That’s the kind of information we can apply in a practical way.

But it’s rare for anyone to ask that sort of follow-up question, and rarer still for the person sharing the anecdote to have the slightest idea how to answer. The technique either “worked” or “didn’t work.” Why? Apparently, because the technique itself just “works” or “doesn’t work,” no matter who does it, how well they do it, what sort of work they’re doing, or under what constraints they’re operating.

And how can we prove the technique works? With statistical analyses that wash out all information about the context in which the technique was used on the projects studied, leaving us with useless conclusions like “on 47% of projects, technique X was shown to improve results by 11%.”

Okay. How does that relate to my current work situation? Or anyone’s? Is our situation consistent with the 47% or not? No way to tell. Unless, maybe, you know someone who was on one of those projects, and they have a story to share.

An anecdote is a story

Parisians have a reputation among Americans for rudeness. When my wife and I visited Paris a few years ago, we found Parisians to be very nice. They went out of their way to try to understand us, even though we could barely croak out enough French to apologize for our bad French. They stopped whatever they were doing to help us. Courtesy and friendliness were universal in all parts of the city and among all sorts of people.

That was an anecdote. It reflects our experience in Paris. Does it negate all the other anecdotes from American tourists who report rude behavior? Of course not. By the same token, do those anecdotes mean Parisians really are rude? No. More likely it means most American tourists come across in a way that raises other people’s defenses. Remember the Ugly American stereotype? It isn’t made up out of thin air. In my anecdotal experience, I’ve seen the stereotype in action numerous times.

On Project A for Client B in year 20xx, we introduced test-driven development (TDD) as a way to address chronic problems in code quality. We found it effective. After the team became reasonably proficient with it, measures of code quality showed improvement.

That was an anecdote. It reflects my experience with Project A for Client B in year 20xx. Does it negate all the other anecdotes from programmers who have had negative experiences with TDD? Of course not. By the same token, do those anecdotes mean TDD really doesn’t help with code quality? No. More likely it means most programmers are unfamiliar with the technique. Remember software’s general reputation for low quality? That reputation wasn’t made up out of thin air. In my anecdotal experience, I’ve seen plenty of low-quality software.

So what?

If you consult a study, be sure to check whether it was conducted in accordance with generally accepted good practices for conducting studies. Beyond that, check whether the researchers have a background in the subject of study, which suggests they understand not only the number of observations but also the implications of those observations. And if you want to use a study to justify adopting or trying an unfamiliar technique, pay attention to the context and constraints that applied in the situations the study covered, and compare them to your own. Don’t just grab a number off the results page, like “47%,” and take it as the signal to go forward or not go forward with the new technique.

If you read or hear anecdotes from other practitioners, be sure to consider the experience and skill of the people telling the stories. Beyond that, pay attention to whether they can explain the parameters of the situations they describe in a way that gives you some basis for judging whether the reported result might apply in your own situation. If they just say “it worked!” or “it didn’t work!” I have to wonder whether that’s sufficient information. Some “stories” are better than others.