Can contemporary lightweight software development and delivery methods “scale” in large enterprises without appropriate support for the venerable IBM mainframe platform? Thought leaders in the “agile” community seem to think so. For example, a proposed session on code isolation and automated testing for mainframe applications received almost no notice from the review team for the Agile 2015 conference. The two reviewers who did notice the submission seemed not to understand what it was about, or to see any relevance or usefulness in it.

It’s true that some large enterprises don’t have mainframe technology. Apple, Google, Amazon, and a handful of others built their businesses in an era when alternatives to the mainframe were plentiful. Those companies tend to be relatively uninterested in “agile” and “lean” methods, as they have come up with their own ways of doing things, and their financial position doesn’t lead them to believe they need to change. They’re probably right. If they were “dysfunctional,” they probably wouldn’t be doing quite so well financially, a minor detail often overlooked by enthusiastic change agents.

However, the majority of larger enterprises have been around for decades. They have used computer technology all those years. The general pattern has been to layer new technologies on top of old rather than to migrate applications. Name a large bank or insurance firm that doesn’t have a significant investment in mainframe systems. Now explain how you would bring agility to those organizations just by setting up a few Scrum teams, applying good technical practices only to the outermost layer of their technology stack, and ignoring technical practices and tooling on the mainframe platform.

What’s the problem?

Over the past few years, people have been paying a lot of attention to the idea of “scaling” contemporary software development and delivery methods to large enterprises. The Agile Alliance has invested considerable time and effort to develop practical approaches to scaling “agile” methods. The Scrum Alliance also has materials on scaling, and Scrum.org has crafted a framework for scaling Scrum called Nexus. The Scaled Agile Framework (SAFe), Large Scale Scrum (LeSS), and Disciplined Agile Delivery (DAD) all offer guidance in applying “agile” methods at scale. Commercial software firms, including Microsoft, Oracle, Hewlett Packard, and others, are providing process frameworks for scaling lightweight development methods as well. Project management software firms, including Rally, VersionOne, and many more, have built support for “agile” and “lean” methods into their products.

All these efforts address two of three key areas: Organizational structure and process. There’s plenty of advice about how to structure teams aligned with value streams and how to manage lightweight delivery processes. Once in a while someone remembers the third key area and exclaims, “Don’t forget technical practices!” Oh yeah, that. Then the company hires technical mentors to show the shiny new Scrum teams how to do things like test-driven development (well, in object-oriented languages, anyway) and continuous integration (well, on Linux and Windows, anyway). Then there’s that other stuff over there in the big room with the raised floor. But we needn’t worry about that; it’s Jurassic Park, and it’s sure to go away any day now. We can spin up a Rails or Angular app to replace all that stuff in five minutes. We’ll be done in time for lunch!

So, we’ve been waiting for the mainframe to die since around 1985 or so. But it hasn’t complied. Instead, it just keeps on evolving. Current versions of the zSeries can run Java better than any other platform. But that isn’t really the issue for bringing contemporary good practices to the mainframe, because companies aren’t doing all that much development in Java on the mainframe. Most employees in those companies aren’t even aware Unix System Services exists on the box. Their needs revolve around ongoing support for existing applications that were originally developed many years ago, written in procedural languages like Cobol, PL/I, Rexx, and even assembly language, running batch processing and what the oldtimers call “online processing” using the mysterious environment known as CICS. Many of those applications depend on data storage facilities, like VSAM, that are quite unfamiliar to younger professionals and completely unsupported by contemporary development tools.

If we don’t provide appropriate tooling and environments to apply contemporary development methods to mainframe technologies, then we will never “scale” such methods to large enterprises. With the mainframe at the heart of operations, and contemporary methods applied only to the outer architectural layers of JavaScript, Java, and so forth, the goal of “scaling” remains unmet.

Often, those outer layer applications in JavaScript and Java or C# provide a pretty face to external customers, and pass messages to an API. They don’t penetrate the whole architecture. Actually, they don’t even perform much, if any, of the business logic for the transactions they wrap. They might call a “framework” that was built in the 2010s to wrap a RESTful API that was built in the 2000s to wrap a SOAP API that was built in the 1990s to wrap an EAI layer that was built in the 1980s to wrap an early attempt at a common interface layer that was built in the 1970s. Different layers in this stack interact with different applications in the environment depending on which layer happened to be the outermost interface at the time each of those applications was acquired or written. Transaction management under the covers is an order of magnitude more complicated than the business rules the systems support. Okay, so maybe we won’t be done in time for lunch. Maybe we should plan on a late dinner at the office and order pizza.

What’s needed?

Contemporary development practices are generally based on the idea of rapid feedback. Programmers want to be able to make a series of small changes to the code and quickly determine whether the code is (still) working and whether they have inadvertently broken any other part of the application. It isn’t really a “new” idea. In the Proceedings of the 1968 NATO conference on software engineering, computer science pioneer Alan Perlis wrote:

“A software system can best be designed if the testing is interlaced with the designing instead of being used after the design. […] Through successive repetitions of this process of interlaced testing and design the model ultimately becomes the software system itself.”

For reasons that are unclear to me, a number of years passed before the software development community took this advice to heart, but once that happened the idea grew from the notion of “test whatever might break” to “test infected” to “test driven” to “always write a failing test before writing any production code.”

The idea is to support very rapid feedback from very small changes that are checked into an automated build and test process very frequently (where “frequently” means “every few minutes,” not “every few weeks”). To achieve this sort of workflow in mainframe application development and maintenance, developers need to be able to do a good deal of their work off-platform – that is, on a laptop or workstation with no connectivity to an actual mainframe system, or indeed to any server.

That last point is the missing piece of the puzzle in tooling to support lightweight development methods for mainframe applications.

We need the ability to automate functional checks at multiple levels of abstraction. The next logical level above the unit level is sometimes called component testing. Above that, we might call out end-to-end functional testing, testing of system qualities (also called “non-functional requirements”), integration testing to ensure components can talk to each other properly, and behavioral testing that mimics the interaction of users with a UI. Contemporary development and delivery methods depend on the ability to automate most of the predictable, routine functional checks at all these levels.

The terminology for these different levels of automated testing is not standardized and many people use the same words to refer to different levels. One example is here: http://watirmelon.com/2011/06/10/yet-another-software-testing-pyramid/. Another, much simpler version, is here: http://martinfowler.com/bliki/TestPyramid.html. Some people call an end-to-end functional test an “integration test,” even though it is concerned with more than just the integration between two components. Some people consider a “unit” to be a whole program while others consider it to be a single method, function, or block of code. Despite the differences in terminology, the general idea is that there are numerous, small, fast test cases that cover “units” of code, with fewer and larger test cases as we progress up the “pyramid.”

What’s available?

Tools to support this mode of work (particularly at the unit level) started to appear toward the end of the 1980s. A notable example is SUnit, developed by Kent Beck to support development in Smalltalk. As Java gained prominence in the mid-1990s, SUnit was ported to Java as JUnit. Later, the same solution was ported to the Microsoft .NET platform as NUnit. The general design of SUnit became an architectural pattern for unit testing frameworks (although it isn’t the only pattern). Today, there are xUnit implementations for many languages. One of them is zUnit, from IBM, to support development for the mainframe platform.

IBM supplies zUnit as part of the Rational Developer suite of tools. It is a faithful implementation of the xUnit architecture. However, that doesn’t automatically make it a suitable unit testing framework for contemporary lightweight development practices. There are three issues.

First, the notion of a “unit” in zUnit is a load module. In other languages, a “unit” is generally considered to be a single method, function, or block. A load module on zOS is conceptually equivalent to an executable file on other platforms: an .exe or .dll file on Windows, or an executable or .so file on Unix or Linux. That puts it at the component level rather than the unit level. The conceptual equivalent of a function or method in Cobol is a paragraph, and in PL/I it’s a block. But the equivalence is only conceptual: paragraphs and blocks are not implemented in the same way as functions and methods in other languages, which is why zUnit doesn’t handle them individually.

Second, zUnit doesn’t give us the opportunity to work completely isolated on our laptop or workstation. The Rational Developer tools have to communicate with other servers – an RD&T (Rational Development and Test) server to emulate mainframe resources like CICS and VSAM, and (possibly) a Greenhat server to support service virtualization. We want our development workstation to be connected only to our version control system, which in turn is connected to our continuous integration server. Otherwise we want to be isolated from the universe.

Third, xUnit-based frameworks generally provide an intuitive DSL (domain-specific language) for unit testing of object-oriented code. As zUnit is a faithful implementation of the xUnit architecture, it force-fits an object-oriented testing DSL into a procedural world. Cobol and PL/I developers do not find the DSL intuitive. Several steps are required to get a single unit test case running. IBM helps the situation by providing “wizards” in Rational Developer to generate the boilerplate code, but it is still a bit tedious to create and maintain a suite of automated checks.

All these issues make it difficult to achieve the seamless, rapid feedback called for by contemporary lightweight development methods. Configuring the RD&T and Greenhat servers takes time; one or both may be unavailable at any given moment for reasons unrelated to the developer’s testing needs; depending on service virtualization to achieve code isolation at the unit and component levels is costly; and the overall check-in, build, and feedback loop may take minutes or even hours instead of seconds.

This may not seem like a serious problem to someone who is unaccustomed to the rapid-feedback workflow, but any delay built into the toolset will cause developers to change more code between check-ins so that they won’t spend as much time waiting for builds. Once that happens, it tends to get worse – developers wait longer and longer between check-ins, creating more defects that take longer to diagnose and causing more merge conflicts that take longer to resolve.

The third issue, the mismatch between the object-oriented and procedural worlds, makes zUnit a bit hard to use from the perspective of experienced Cobol and PL/I developers. In general, when people find a tool hard to use, they tend to stop using it. When our goal is to scale good practices, that alone would bring the entire “scaling” initiative to an abrupt halt.

At the functional and integration levels, the tools provided by IBM and other vendors offer good support for automated checks. Service virtualization provides the appropriate level of code isolation for automated checks at those higher levels of abstraction, toward the top of the automated testing pyramid.

At the behavioral level, several tools are available to support testing through the UI. For instance, Cucumber can be used with appropriate gems to automate checks through an off-platform GUI or through a “green screen” interface: swinger for Java Swing, page-object for web interfaces, and te3270 for green screen interfaces. For .NET, there’s SpecFlow.

The tooling gap is at the lower levels of the automated testing pyramid – unit and component checks.

Filling the gap – unit testing

As unit tests must be able to look inside a compilation unit or run unit so they can exercise individual functions and methods, unit test cases are written in the same language as the application under test.

Automated unit testing for PL/I

In the interest of delivering bad news first: If you are a PL/I user then you’re on your own to build unit testing tools. As far as I know the best off-the-shelf option you have is zUnit, and that will not get you down to the level of isolated, individual blocks.

Automated unit testing for Cobol

The Open Source project cobol-unit-test supports isolated, automated testing of individual paragraphs in Cobol programs. It is at an early stage of development, but it is usable. It works with batch and CICS source programs. You do not need any runtime environment other than your own laptop or workstation to run the unit tests. The tool itself is written in Cobol, so all you need is a good Cobol compiler for Windows, Linux, or OS X, like Micro Focus Cobol or GnuCOBOL.

Mainframe languages were not designed to allow tools (like test frameworks) to modify object code or to cause the code under test to branch around sections that are not of interest to a particular test case. That means we must modify the source code that will be tested to merge test code with it, and produce a copy of the program that includes the test code.

The cobol-unit-test framework is implemented as a precompiler that merges your test cases with the program under test to produce a test program. When you run the test program, it executes the individual test cases rather than running the program as a single unit. So you have a three-step process:

- Precompile – merge the test code with the program under test

- Compile – compile the test program resulting from step 1

- Run – run the test program, which will run the individual test cases one by one rather than running the program from top to bottom

You express test cases using a DSL that is designed to be relatively intuitive for people who are accustomed to Cobol syntax. For example, you could write something like this:

```cobol
TESTSUITE 'CONVERT COMMA-DELIMITED FILE TO FIXED FORMAT'

BEFORE-EACH
INITIALIZE WS-RESULTS-TABLE
END-BEFORE

TESTCASE 'IT CONVERTS TEXT FIELD 1 TO UPPER CASE'
MOVE 'something' TO TEXT-VALUE-1
PERFORM 2100-CONVERT-TEXT-FIELD-1
EXPECT TEXT-OUT-1 TO BE 'SOMETHING'

TESTCASE 'IT HANDLES FILE NOT FOUND GRACEFULLY'
MOCK
FILE INPUT-FILE
ON OPEN STATUS FILE-NOT-FOUND
END-MOCK
PERFORM 0100-OPEN-INPUT
EXPECT WS-INPUT-FILE-STATUS TO BE '35'
EXPECT WS-FRIENDLY-MESSAGE TO BE 'SORRY, COULDN''T FIND INPUT-FILE'
```

You can tell what the test case is trying to do without having to spend much time on analyzing the code. You can also get a general sense of the DSL, probably well enough to write another test case without having to take a training class or read a user manual. The tool has a few more features than the ones shown here. See the project wiki for details.
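Behind a DSL like this, each statement ultimately has to become ordinary Cobol that the compiler accepts. The Python sketch below shows one hypothetical way a precompiler might translate a single `EXPECT <data-name> TO BE <value>` line into an `IF` statement. The paragraph names `UT-PASS` and `UT-FAIL` are invented for illustration; this is not the code cobol-unit-test actually generates.

```python
import re
from typing import List

# EXPECT <data-name> TO BE <value>; a quoted literal may contain doubled quotes ('').
EXPECT_RE = re.compile(
    r"^\s*EXPECT\s+([A-Z0-9-]+)\s+TO\s+BE\s+('(?:[^']|'')*'|\S+)\s*$",
    re.IGNORECASE,
)


def translate_expect(line: str) -> List[str]:
    """Translate one EXPECT statement into Cobol source lines (hypothetical)."""
    m = EXPECT_RE.match(line)
    if m is None:
        raise ValueError(f"not an EXPECT statement: {line!r}")
    field, expected = m.group(1).upper(), m.group(2)
    # A real precompiler would also split lines that run past column 72.
    return [
        f"           IF {field} = {expected}",
        "               PERFORM UT-PASS",
        "           ELSE",
        "               PERFORM UT-FAIL",
        "           END-IF",
    ]
```

For example, `EXPECT TEXT-OUT-1 TO BE 'SOMETHING'` becomes an `IF TEXT-OUT-1 = 'SOMETHING'` block that records a pass or a failure.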

Automated unit testing for Rexx

Rexx is a scripting language, and it is not commonly used for full-blown applications in the way that Cobol and PL/I are. Thus, you may not have much complicated, mission-critical Rexx code in production. But many people appreciate Rexx and use it for serious programming. It may be worthwhile to test-drive, or at least unit test, the more complicated Rexx functions.

Like other mainframe languages, Rexx isn’t designed to be modified on the fly. The Open Source project t.rexx takes the approach of concatenating unit test files with the actual Rexx script file to produce a runnable Rexx script that executes the individual test cases.
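A minimal Python sketch of that concatenation step might look like the following. It is not t.rexx's own tooling; the function name and the layout are assumptions. The test statements go first and are followed by `exit`, so the script's own mainline never runs, while the script's internal routines (such as `calc`) stay callable because Rexx finds labels anywhere in the same file. `script_sources` would hold the framework routines as well as the script under test. The leading `/* REXX */` comment is there because z/OS identifies execs loaded from SYSPROC by a first-line comment containing the word REXX.

```python
from typing import List


def build_test_script(test_source: str, script_sources: List[str]) -> str:
    """Concatenate Rexx test code with the source(s) it exercises (hypothetical).

    Layout: REXX comment, test statements, 'exit', then each source in order.
    """
    parts = ["/* REXX */", test_source.rstrip("\n"), "exit"]
    parts.extend(src.rstrip("\n") for src in script_sources)
    return "\n".join(parts) + "\n"
```

Writing the returned text to a file and running it with any Rexx interpreter executes the test cases and nothing else.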

Here’s an example of the DSL for t.rexx unit tests:

```rexx
/* test script to demonstrate the rexx unit test framework */
context('Checking the calc function')
check( 'Adding 3 and 4', expect( calc( 3, '+', 4 ), 'to be', 6 ))
check( 'Adding 5 and 2', expect( calc( 5, '+', 2 ), 'to be', 7 ))
check( 'Subtracting 3 from 10', expect( calc( 10, '-', 3 ), 'to be', 7 ))
check( 'Multiplying 15 and 2', expect( calc( 15, '*', 2 ), 'to be', 31 ))
check( 'Dividing 3 into 15', expect( calc( 15, '/', 3 ), 'not to be', 13 ))
```

Rexx is a cross-platform scripting language backed by a strong user community. That means you can find multiple implementations of Rexx for your favorite desktop or laptop operating system as well as a lot of useful advice for using the language effectively. It also means you need to pay attention to zOS compatibility when running unit tests off-platform. What runs on Linux or Windows may or may not run exactly the same on zOS.

Automated unit testing for Assembly language

There aren’t many practical ways to run S/390 Assembly language off-platform. One way is to use the z390 Portable Mainframe Assembler and Emulator project, an Open Source project that has been evolving steadily over the years thanks to the contributions of long-time mainframe assembly experts. Another option is to use the Hercules System/370, ESA/390, and z/Architecture Emulator, another Open Source initiative to emulate mainframe functionality.

Current releases of IBM software products are not free for the asking, so with Open Source emulators you are limited to old releases of IBM software that are in the public domain. This may or may not be an issue in your situation, depending on how old your Assembly language legacy applications are.

A more pertinent question may be to ask just how valuable it is to get automated unit tests around your Assembly language code. If you have mission-critical applications that are modified frequently, then the cost of creating an automated suite of unit tests may be lower than the value of the reduced risk of change. However, in many legacy environments the Assembly language code is very stable. The risk of change is low because the frequency of change is low.

If you do see value in it, the good news is that it’s actually pretty simple to write a unit testing framework in Assembly language. There’s a toy project on GitHub that works with the z390 Portable Mainframe Assembler project mentioned above. The z390-assembly-unit-test project provides a simple but working proof of concept for automated unit tests of Assembly code.

It consists of a small set of macros that provide a unit testing DSL and consistent reporting of test results. Test cases look something like this:

```asm
T1       EQU   *
         TESTCASE 'It finds ''def'' in ''abcdefghi''',29
         SETVAL PATTERN,=C'def',3
         SETVAL STRING,=C'abcdefghi',9
         SETVAL EXPECTED,=C'def',3
         CALL  REGEX,(PATTERN,STRING,RESULT),VL
         CLC   RESULT(3),EXPECTED
         BE    T1PASS
         FAILED
         B     T2
T1PASS   EQU   *
         PASSED
```

The example is checking that a routine named REGEX can apply a regular expression to the string ‘abcdefghi’ to locate the substring ‘def’. It’s a far cry from test code that looks like plain English, but it’s fairly intuitive to a person familiar with S/390 Assembly language. You can use the toy project as a starting point or roll your own framework from scratch. Either way, you’ll probably have a test case running within an hour.

Filling the gap – component testing (batch)

Above the “unit” level, automated test suites usually run on the platform where the application normally runs (in a test or development environment, of course). So, I’m assuming your “component” tests run on the zOS platform.

Although IBM considers a whole load module to be a “unit” of code for purposes of zUnit, it would be more consistent with industry norms to think of a whole load module as a “component.” For a batch jobstream, any single step can be considered a “component.” Any time an EXEC card refers to an application program rather than a utility, the step may be a reasonable candidate for isolated automated testing.

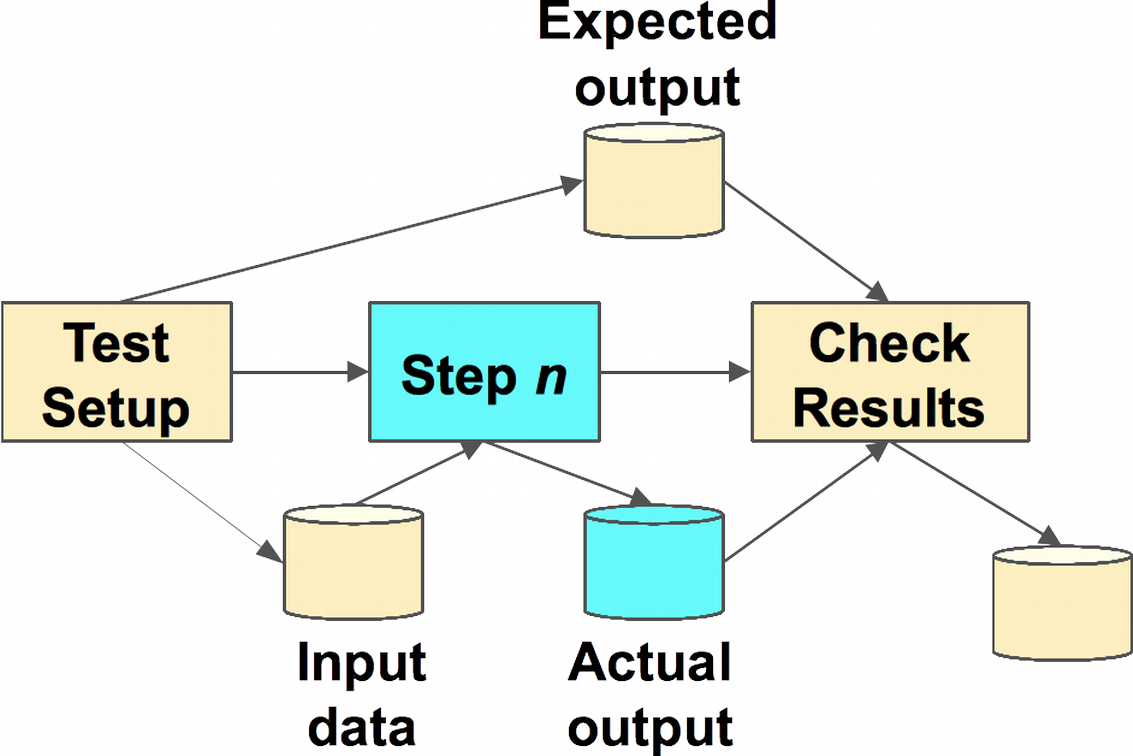

Fortunately, it’s quite easy to isolate individual steps in a batch job and test them separately from the whole jobstream. No additional tools beyond the software that comes with a zOS system are required. It’s just a question of setting up test input files, expected output files, JCL, and some sort of script or program to compare the expected and actual output and to create a test report.
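For the comparison step, a compare utility that ships with the system, such as ISPF SuperC, may be all you need. To show what the step has to do, here is a minimal Python sketch of a record-by-record comparison that produces one PASSED or FAILED line per test plus a detail line for each mismatched record. The function name and the report layout are hypothetical.

```python
from itertools import zip_longest
from typing import List


def compare_outputs(test_name: str, expected: List[str], actual: List[str]) -> List[str]:
    """Compare expected and actual output records; return report lines.

    Missing records on either side show up as None in the detail lines.
    """
    details = []
    for recno, (exp, act) in enumerate(zip_longest(expected, actual), start=1):
        if exp != act:
            details.append(f"  RECORD {recno}: EXPECTED {exp!r} ACTUAL {act!r}")
    status = "FAILED" if details else "PASSED"
    return [f"{test_name} {status}"] + details
```

Whatever tool you use, the key design point is the same: the output of the step under test is checked against a known-good expected file, and the result is written in a form the next step can collect.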

So, each “test job” comprises a repeated series of three steps: a setup step, a test execution step, and an output comparison / reporting step. A test jobstream might include several isolated component tests for each step. Often, people wrap up this type of job with a step to concatenate all the test result reports into a single file. This file can be shipped back off-platform to a CI server where the test results are consolidated with those from component tests on other platforms. This provides a one-stop shop to find component-level test results for the entire application, covering all architectural layers. It also satisfies audit requirements by proving that all code changes were tested appropriately.
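How the consolidated results reach the CI server depends on your pipeline, but many CI servers can read JUnit-style XML reports. The Python sketch below converts a simple text report into that format using the standard library's `xml.etree.ElementTree`. It assumes a hypothetical report layout: each test contributes an unindented line ending in `PASSED` or `FAILED`, optionally followed by indented detail lines describing a failure.

```python
import xml.etree.ElementTree as ET
from typing import List


def report_to_junit(suite_name: str, report_lines: List[str]) -> str:
    """Convert a simple pass/fail text report into a JUnit-style XML string."""
    suite = ET.Element("testsuite", name=suite_name)
    tests = failures = 0
    failure = None  # <failure> element of the most recent failed test, if any
    for line in report_lines:
        if not line.strip():
            continue
        if line[0].isspace():
            # Indented detail line: attach it to the current failure.
            if failure is not None:
                failure.text = (failure.text or "") + line.strip() + "\n"
            continue
        name, _, status = line.rstrip().rpartition(" ")
        if status not in ("PASSED", "FAILED"):
            raise ValueError(f"unrecognized report line: {line!r}")
        case = ET.SubElement(suite, "testcase", name=name, classname=suite_name)
        tests += 1
        failure = None
        if status == "FAILED":
            failures += 1
            failure = ET.SubElement(case, "failure", message="expected and actual output differ")
    suite.set("tests", str(tests))
    suite.set("failures", str(failures))
    return ET.tostring(suite, encoding="unicode")
```

Because results from every platform end up in the same format, the CI server can present mainframe and off-platform component tests side by side.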

Although component tests normally run on-platform, depending on which mainframe resources your job step requires, you may be able to run some or all of your batch component tests off-platform. A colleague of mine, Glenn Waters, was determined to get a batch job running on Windows using GnuCOBOL, including its VSAM files. He was able to convert downloaded VSAM file exports into Berkeley DB format. The mainframe Cobol application programs ran nicely on Windows with GnuCOBOL, with no source code modifications. If you can run component-level tests off-platform as well as unit tests, it will greatly shorten the turnaround time to see test results during development.
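The details of that conversion aren't described here, but its basic shape is: split the export into fixed-length records and store each one under its key field. The Python sketch below illustrates only that shape. It uses the standard library's `dbm.dumb` as a stand-in for Berkeley DB, so the resulting file is not one GnuCOBOL could open. It also assumes the export has already been converted from EBCDIC to ASCII and contains no packed-decimal fields; a real conversion has to deal with both.

```python
import dbm.dumb
from typing import Iterator


def split_records(data: bytes, record_len: int) -> Iterator[bytes]:
    """Yield fixed-length records from the contents of a flat export file."""
    if record_len <= 0 or len(data) % record_len:
        raise ValueError("export length is not a multiple of the record length")
    for start in range(0, len(data), record_len):
        yield data[start:start + record_len]


def load_ksds_export(data: bytes, record_len: int, key_offset: int,
                     key_len: int, db_path: str) -> int:
    """Store each record under its key field; return the number of records."""
    db = dbm.dumb.open(db_path, "n")  # 'n': always start with an empty database
    try:
        count = 0
        for record in split_records(data, record_len):
            db[record[key_offset:key_offset + key_len]] = record
            count += 1
        return count
    finally:
        db.close()
```

Keyed reads against the resulting store then behave much like a VSAM KSDS read by primary key.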

Filling the gap – component testing (CICS)

In most shops, people forgo component-level testing of CICS applications and rely on end-to-end testing of transactions through the UI. It’s possible to use service virtualization tools to set up isolated tests of selected groups of CICS programs, but using service virtualization at this level of testing can be tedious and error-prone.

A testing facility that made it easy to isolate individual CICS application programs and selectively mock EXEC CICS commands would be useful in shops that have a significant number of mission-critical CICS applications that are still under active maintenance. Unfortunately, there is no off-the-shelf testing facility of this kind.

It’s possible to roll your own testing facility for component-level testing of CICS applications. Generally, it needs the following capabilities:

- An intuitive DSL for writing test cases. This can be based on the DSL developed for cobol-unit-test, but would be even better if it were language-independent.

- A test driver to execute the test cases. This would be a straightforward CICS application program.

- A way to trap EXEC CICS commands issued by programs in the test region and mock out the commands that are specified in the currently-executing test case.

- A way to trigger a test run from outside the CICS environment. This makes it possible to include the component-level test suite for CICS in automated test jobs and scripts.

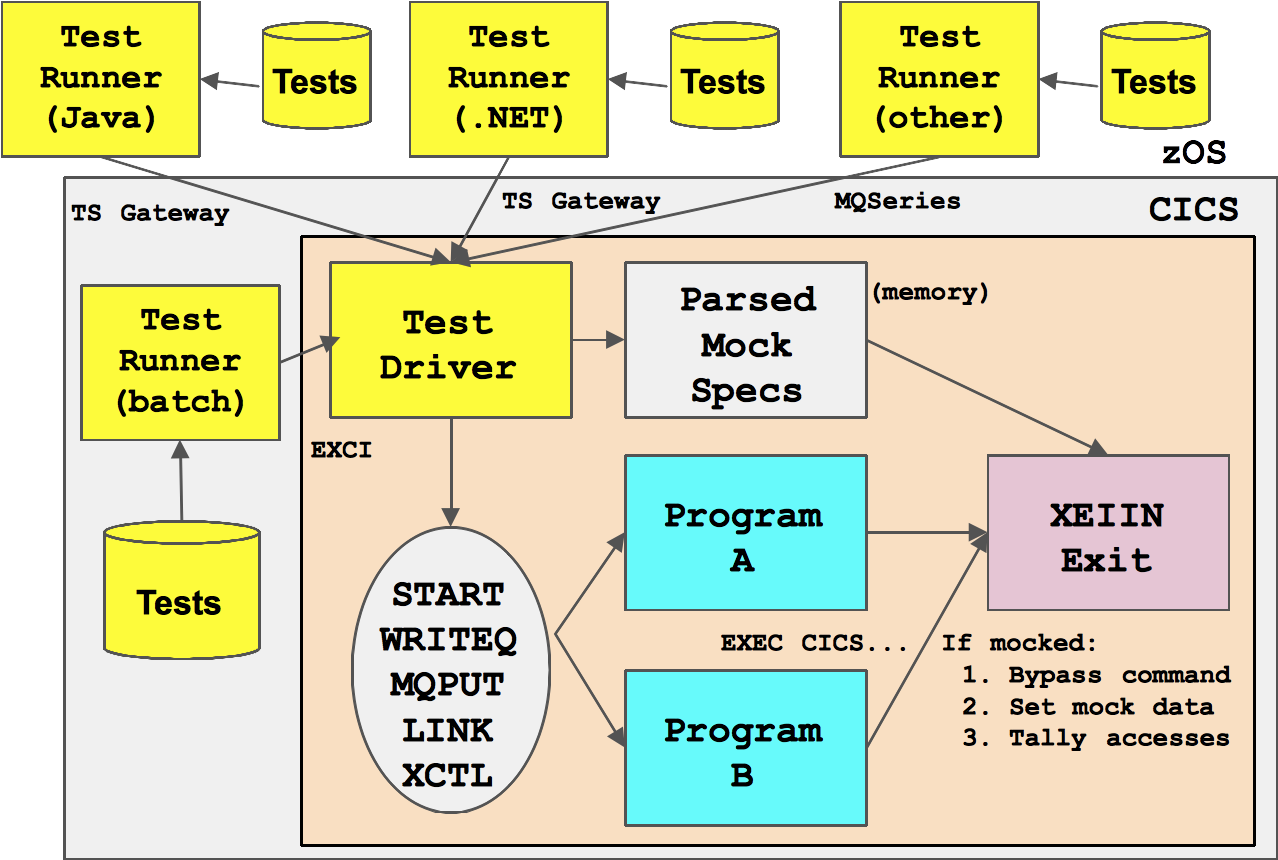

The diagram above suggests a possible implementation approach.

In the test CICS region, the Test Driver is the CICS application program that executes test suites. It knows how to parse the test DSL and provide the mock specifications to the XEIIN global exit. It also knows how to start the CICS applications under test using whatever means those applications are normally initiated. Metadata about the applications under test might have to be supplied somehow, such as a configuration file (not shown). This program can be written in any language that supports the CICS command-level interface.
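Part of the Test Driver's job is turning the test DSL into mock specifications the exit can consult. No DSL for CICS mocks exists yet, so the syntax in this Python sketch is invented for illustration and only loosely modeled on the cobol-unit-test `MOCK` block: lines of the form `CICS <command> <resource type> <resource name> RESP <condition>` between `MOCK` and `END-MOCK`. A real driver would be a CICS program and would hand the resulting table to the exit through some shared storage, which is not modeled here.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical mock syntax, e.g.  CICS READ FILE CUSTMAST RESP NOTFND
MOCK_LINE = re.compile(r"^CICS\s+(\S+)\s+(\S+)\s+(\S+)\s+RESP\s+(\S+)$", re.IGNORECASE)

# (command, resource type, resource name) -> condition to return
MockKey = Tuple[str, str, str]


def parse_mocks(lines: List[str]) -> Dict[MockKey, str]:
    """Collect the mock specifications from one test case (hypothetical DSL)."""
    mocks: Dict[MockKey, str] = {}
    inside = False
    for raw in lines:
        line = raw.strip()
        if line.upper() == "MOCK":
            inside = True
        elif line.upper() == "END-MOCK":
            inside = False
        elif inside and line:
            m = MOCK_LINE.match(line)
            if m is None:
                raise ValueError(f"unrecognized mock specification: {line!r}")
            command, rtype, rname, resp = (g.upper() for g in m.groups())
            mocks[(command, rtype, rname)] = resp
    return mocks
```

Lines outside the `MOCK` block (the test case name, the actions, the expectations) are left for other parts of the driver to handle.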

The XEIIN global user exit receives control when a CICS program issues an EXEC CICS command. It first checks whether the calling program is a CICS-supplied module and, if so, does not interfere with the call. Then it checks whether the EXEC CICS command is to be mocked. If so, it supplies the return values required by the test case and sets the bypass bit to suppress execution of the command. This might include setting specified values in memory, raising a condition, or forcing an abend.

This gives you a practical way to mock different EXEC CICS commands in each test case, allow a mixture of real and fake commands, and supply any return values, condition codes, and abend codes to the test program that each test case requires.
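The real exit must be written in assembly language (or C), but its decision logic is simple enough to model. The following Python simulation is only a sketch of that logic under stated assumptions: CICS-supplied modules are recognized by a `DFH` name prefix, mocks are keyed by command, resource type, and resource name, and `ExitDecision` is an invented type. An actual XEIIN exit works with CICS-supplied parameter lists and return codes that are not modeled here.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# (command, resource type, resource name), e.g. ("READ", "FILE", "CUSTMAST")
MockKey = Tuple[str, str, str]


@dataclass
class ExitDecision:
    bypass: bool                # True: suppress the real EXEC CICS command
    resp: Optional[str] = None  # condition to hand back, e.g. "NOTFND"


def on_exec_cics(caller: str, command: str, rtype: str, rname: str,
                 mocks: Dict[MockKey, str]) -> ExitDecision:
    """Model of the exit's per-command decision (simulation only)."""
    # Assumption: CICS-supplied modules are the ones whose names start with DFH.
    if caller.upper().startswith("DFH"):
        return ExitDecision(bypass=False)
    resp = mocks.get((command.upper(), rtype.upper(), rname.upper()))
    if resp is None:
        return ExitDecision(bypass=False)  # not mocked: let the real command run
    return ExitDecision(bypass=True, resp=resp)
```

Because unmocked commands fall through to CICS, a single test case can mix real and fake commands, which is the property described above.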

If your CICS environment is on zOS then you must write the exit program in assembly language. If your CICS environment is on AIX then you must write it in C.

A Test Runner is a program that initiates the Test Driver from a non-CICS environment where the automated test scripts are triggered across all platforms. How you set this up depends on how you configure your continuous delivery pipeline. Some possible implementations are suggested in the diagram, including:

- A batch program on zOS that uses EXCI to start the Test Driver

- A Java program on Windows, Linux, or Unix that uses the CICS Transaction Gateway to start the Test Driver

- A .NET program on Windows that uses the CICS Transaction Gateway to start the Test Driver

- Any program on any platform that puts a message on an MQSeries queue to start the Test Driver

An agile approach to agile enablement

Many people are inclined to wait for “someone” to hand them a fully-functional, feature-rich testing tool that can be used in any enterprise environment worldwide. A more practical approach may be to build the tooling you require in your own environment.

Your test facility need not support the requirements of the company down the street or in the next city. It only needs the features necessary to support automated testing of your applications. You can build tools for yourself that meet your immediate requirements. It’s okay if the tools you build can only work with your applications. Add the features you need at the time the need arises. Don’t try to anticipate all future requirements and turn it into a monstrous development project.

Conclusion

To integrate the mainframe platform fully into an enterprise continuous delivery pipeline, and to enable contemporary good practices in software development for all systems in an enterprise, we need to fill in the gaps in tooling for automated testing and test-driven development. Change agents who gloss over this are only playing at “scaling” lightweight methods and good practices.

It is interesting to know that we can trigger testing from outside the CICS environment. Is it possible to test CICS maps using this method as well?

Hi Manikandan,

There are several ways to trigger CICS transactions from outside the CICS environment, and even from off-platform. Here’s a document that describes them: http://examples.oreilly.com/cics/CDROM/pdfs/cicsts/dfhtm00.pdf.

I think this post on the Mainframe Tips and Tricks blog provides a pretty clear description of how to invoke a CICS program using EXCI from batch: http://mainframe-tips-and-tricks.blogspot.com/2012/01/call-cics-program-from-batch-program.html.

Ultimately all these methods end up invoking a program using LINK or DPL. They don’t go through BMS. To test an application through the user interface, I don’t know of a way other than a screen-scrape approach. There’s a pretty straightforward way to do this using Ruby.

A Ruby gem called “cucumber” provides a very clean testing framework, and a gem called “te3270” provides an object-oriented abstraction of a CICS screen. This doesn’t give you a way to test the BMS map as such, but it does help you test the application through the UI, without having to bypass the UI and do a LINK.

Take a look at:

cucumber – https://github.com/cucumber/cucumber

te3270 – https://github.com/cheezy/te3270

To help you convert DFHMDF macros into te3270 text_field definitions, consider the gem “dfhmdf” – https://github.com/neopragma/dfhmdf.
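To give a feel for what such a conversion involves, here is a minimal Python sketch that pulls the name, `POS`, and `LENGTH` operands out of a single-line DFHMDF macro and emits a te3270-style field definition. It is not the dfhmdf gem's code. It assumes te3270's `text_field(:name, row, column, length)` form, a macro with no continuation lines, and `POS=(row,column)` notation. It places the field one column after `POS` because in BMS the `POS` operand locates the field's attribute byte, and the data starts in the next position.

```python
import re
from typing import Optional

DFHMDF_RE = re.compile(r"^(\S+)\s+DFHMDF\s+(.*)$", re.IGNORECASE)
POS_RE = re.compile(r"POS=\((\d+),(\d+)\)", re.IGNORECASE)
LEN_RE = re.compile(r"LENGTH=(\d+)", re.IGNORECASE)


def dfhmdf_to_text_field(line: str) -> Optional[str]:
    """Return a te3270-style text_field line for a named DFHMDF macro, else None."""
    m = DFHMDF_RE.match(line.strip())
    if m is None:
        return None  # unnamed field (nothing to reference) or not a DFHMDF macro
    name, operands = m.group(1), m.group(2)
    pos, length = POS_RE.search(operands), LEN_RE.search(operands)
    if pos is None or length is None:
        return None
    row, col = int(pos.group(1)), int(pos.group(2))
    # Data begins just after the attribute byte; wrap-around at the end of a row
    # and names that are not valid Ruby symbols are not handled in this sketch.
    return f"text_field(:{name.lower()}, {row}, {col + 1}, {length.group(1)})"
```

For example, `USERID DFHMDF POS=(10,29),LENGTH=20,ATTRB=(UNPROT,IC)` yields `text_field(:userid, 10, 30, 20)`.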

It’s straightforward to write test scripts that screen-scrape 3270 screen applications using Ruby with these gems. You could use them to test CICS, IMS/DC (or IMS/TM), or ISPF applications through the UI.

Thank you so much, Dave! It is very useful to me.

I would be very interested in code isolation.

Many PL/I and Cobol programs use static variables.

They do not pass parms when calling procedures, and the procedures do not return parms.

This makes testing procedures, or pulling procedures out into called modules, hard.

Is there a tool that can generate parms and returned parms for procedures?

As far as I know, there is no such tool. The approach I took with Cobol Unit Test was to write a precompiler that inserts the unit test cases at the top of the Procedure Division, as well as some Working-Storage Section items that are used by the tool. The test cases can then invoke individual paragraphs in the program under test, and then make assertions about the values of Working-Storage and Linkage Section items.

That sort of approach is necessary for legacy code, which tends to consist of large, monolithic code units. For new code, it might be advisable to structure applications as numerous small programs that call each other and pass parameters. That sort of structure would greatly simplify the problem of test automation for Cobol. But there could be trade-offs in terms of maintainability and performance that must be considered, as well.

Regarding PL/I, I don’t know of any unit testing tools at all except for IBM’s zUnit. zUnit can run test cases against whole load modules, but doesn’t allow for isolating smaller code units than that inside of a PL/I or Cobol run unit.

Sorry I can’t offer a happier answer to your question!