Garlic is widely considered to offer significant health benefits. It’s also a delicious and versatile ingredient in foods. Would tomato-based pasta sauce be pasta sauce at all if you omitted garlic? (Ignore American-style fast-food pasta sauce for the moment. Canadians, before you smirk, I have just two words for you: Pizza-Pizza.)

Chocolate, as well, brings a variety of health benefits. It, too, is delicious and a versatile ingredient in foods. What would a chocolate bar be if you omitted the chocolate? (Ignore "white chocolate" for the moment. Come to think of it, just ignore "white chocolate" altogether.)

Logically, then, it follows that chocolate-covered garlic cloves must surely be among the healthiest and most delicious foods one could hope for.

But why stop there? Glass is a wonderful material that adds much to our modern way of life. There is even a form of biocompatible glass that helps broken bones heal. Clearly, glass is good for the body.

Logically, then, it follows that chocolate-covered garlic cloves with tiny shards of glass embedded in them must surely be a super health food as well as a fabulously delicious snack. What an amazing rainbow of flavors and textures in the mouth! Ah, the sultry contralto notes of the chocolate, the lingering bite of the garlic, the metallic tang of the blood. And all of that still but a prelude to the inevitable conclusion.

The same logic applies to the task of selecting tools and methods for developing application software. I recall one project in particular that illustrates this approach quite well. The company wanted to maximize their chances of delivering a high-quality, well-aligned, usable product in a reasonable time. They went in search of the Best Practices Ever for delivering software, and identified three Good Ideas. Then came the flash of insight that set the stage for success: Combining all three Good Ideas on the same project could only result in three times the Goodness!

Well, in theory, anyway. In the immortal words of American philosopher Lawrence Peter Berra, "In theory there is no difference between theory and practice. In practice there is."

The recipe for this project included three main ingredients plus several spicy seasonings (also known as organizational issues).

Ingredient 1: UX-Centered Design

The first ingredient was UX-centered design. "UX" stands for "user experience." User experience professionals are rational beings, and so of course they wanted to call it UE because "experience" doesn’t start with an X, but the University of Evansville, Indiana, protested on intellectual property grounds (not to mention the sunk cost of all those T-shirts on sale in the University bookstore), and so the user experience folks had to resort to the second letter of the word "experience" for their acronym. (Note to University attorneys: Humor is protected speech, even when it isn’t funny.) For consistency’s sake, they probably should have called it SX for uSer eXperience, but they didn’t think of that and now it’s too late. So, UX it is. The rambling, irrelevant, circular nonsense in this paragraph isn’t random; it’s meant to illustrate what it feels like to participate in a typical UX design session. (Spoiler alert: This is relevant to the way the work flowed on the project.)

On a more serious note, UX-centered design seeks to understand not only how people interact with systems but also why they do so. The idea is to understand the user’s work flow in context, so that user interfaces and system behaviors can be tailored to user needs in ways that result in a generally positive experience overall. We don’t want people to get tired or stressed out from using a system all day. UX goes beyond the well-known rules of thumb about user interface design, such as "Don’t do anything surprising," and drives design from a deeper understanding of people’s needs. Sometimes the guideline about surprises gets lost in the shuffle, but that’s okay because we’re going after Higher Goals here. (I said a serious note, not a whole song.) In general, UX-centered design qualifies as a Good Idea.

Ingredient 2: Extreme Programming

The second ingredient was an approach to software development known as Extreme Programming, or XP. The creators of XP are rational beings, and so of course they wanted to call it EP because "extreme" doesn’t start with an X, but they received a message by mysterious means informing them that EP was already in use as an acronym for Extrasensory Perception. In keeping with their own methodology, they iterated over the word "extreme" until they came to a letter that wasn’t E, and they used that letter because it was the simplest thing that could possibly work. Coincidentally, the acronym XP would one day be used for an obscure Microsoft product whose name I can’t quite recall, but they didn’t receive that information via EP and now it’s too late. So, XP it is.

It’s hard to cubby-hole XP as a process framework, a methodology, or a set of practices because it comprises elements of all three. It calls for a time-boxed iterative process with direct customer participation, incremental delivery, and frequent feedback. It includes practices for team collaboration and coordination of work. It specifies several software development practices that complement one another to help teams produce high-quality software that aligns well with business needs, all with a minimum of wasted time. XP is especially useful in cases when we need to explore the solution space in collaboration with our customer to evolve the most appropriate solution, when developing code from scratch. (Spoiler alert: Remember those conditions later, when reading about the characteristics of the particular project.) XP qualifies as a Good Idea.

Ingredient 3: Code Generator

While it is generally true that software development is a creative endeavor, it is also true that the majority of business applications are variations on a few common themes. Most interactive business applications are, in essence, CRUD applications that support a few business rules. (By CRUD I don’t mean crud, I mean Create-Read-Update-Delete.) Business rule support and non-standard external interfaces are the only custom code needed, and tools offer configuration options and define points in the request/response cycle where you can drop in modules to support these things. In addition, business applications support some kind of user interface. Good code generators can produce either thick or thin clients. Provided the generated application is fairly close to what you ultimately want to deploy (spoiler alert), a code generator can save time and cost and avoid defects in the code. Code generators qualify as a Good Idea.
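
To make the "CRUD plus a few business rules" shape concrete, here is a minimal Python sketch. It is not ADF output or any real generator's output; `CrudStore`, its `rules` hook, and the `quantity_must_be_positive` rule are hypothetical names invented for illustration. The only point is that the four operations are generic, and the custom part is a small module dropped in at a defined point in the write path.

```python
from typing import Any, Callable, Dict, List, Optional

Record = Dict[str, Any]
Rule = Callable[[Record], None]  # a rule raises ValueError to reject a record


class CrudStore:
    """Generic in-memory CRUD store; the only custom code is the rules plugged in."""

    def __init__(self, rules: Optional[List[Rule]] = None) -> None:
        self._rows: Dict[int, Record] = {}
        self._next_id = 1
        self._rules: List[Rule] = rules or []

    def _check(self, record: Record) -> None:
        # Hook point: every rule runs before a write is accepted.
        for rule in self._rules:
            rule(record)

    def create(self, record: Record) -> int:
        self._check(record)
        row_id = self._next_id
        self._rows[row_id] = dict(record)
        self._next_id += 1
        return row_id

    def read(self, row_id: int) -> Optional[Record]:
        row = self._rows.get(row_id)
        return dict(row) if row is not None else None

    def update(self, row_id: int, changes: Record) -> None:
        if row_id not in self._rows:
            raise KeyError(row_id)
        merged = {**self._rows[row_id], **changes}
        self._check(merged)  # a rejected update leaves the stored row untouched
        self._rows[row_id] = merged

    def delete(self, row_id: int) -> None:
        del self._rows[row_id]


def quantity_must_be_positive(record: Record) -> None:
    """Hypothetical business rule, made up for this example."""
    if int(record.get("quantity", 0)) <= 0:
        raise ValueError("quantity must be positive")
```

Something like `CrudStore(rules=[quantity_must_be_positive])` is then the whole "application"; everything outside the rule is boilerplate a tool could plausibly produce from a schema.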

On this project, they decided to use a tool from Oracle Corporation called Application Development Framework. ADF combines several other frameworks and packages, as well as custom code, to create a facility that can generate a fully-functional CRUD application based on a database schema definition. The generated application is in Java, runs on WebLogic, has the conventional Model 2 (MVC-ish) architecture, is integrated with the Oracle RDBMS, uses Java Swing for thick clients and Java Server Faces (JSF) for thin clients (with nearly 200 drop-in UI widgets), and has a pretty nice default look and feel that can be changed if desired. If you’re wondering why I haven’t made fun of ADF, it isn’t because I’m promoting the product. It’s because to a software developer there’s nothing amusing about a code generator.

Conflicting cooking methods

Generally speaking, there are a couple of approaches to cooking (building) an application. One is sometimes called inside-out or back-to-front design. We start with the core or foundational pieces (the "inside") of the application and build outward from there toward the external interfaces (the "outside"). Another way to express it is to say we start with the back end and build toward the front end. With this approach, we build up the code in horizontal layers corresponding to the architectural layers of the application based on a comprehensive design prepared in advance.

A contrasting approach is sometimes called outside-in or front-to-back design. We start with the externally-visible attributes of the application — its functional behavior, visible performance attributes, look and feel, and user experience — and build up the code necessary to realize those qualities. We start with the external interfaces (the "outside") and work toward the core pieces (the "inside"). Another way to express it is to say we start with the front end and build toward the back end. This approach can be used with either a comprehensive design or an incremental development method; the key point is that design is driven by the externally-visible behaviors of the application.

While all the key ingredients chosen for this project are Good Ideas in their own right, each one calls for a different cooking method. This makes it very difficult to combine them in any sort of recipe.

UX-centered design naturally calls for an outside-in approach to building the application. Developers usually take an adaptive approach to the design when they use an outside-in approach. However, historically UX specialists have tended to prefer doing a comprehensive design in advance. Their design choices are informed by empirical investigation of user preferences, often using wireframes, thin prototypes, or focus groups to collect feedback about the sort of user experience that would best meet people’s needs. It is feasible to take a UX-centric approach in combination with an incremental development method, but there are certain challenges involved, as Peter Hornsby describes in an article on the UX Matters website.

Extreme Programming is specifically intended to support iterative development and incremental delivery based on frequent customer feedback. The way to obtain that feedback is by demonstrating fully-functional increments of the solution at regular intervals. In effect, this requires us to take the outside-in approach to building the code. We have to create vertical slices of functionality that penetrate all the way through the solution architecture and that actually drive the evolution of that architecture incrementally. When we try to use XP or similar methods in conjunction with "big design up front," the process-related overhead for the demonstrations and iterative planning activities tends not to be repaid.

Oracle ADF depends on having a complete definition of the database schema in place before generating the application. The tool does not lend itself to an iterative/incremental development approach, because each change to the schema, however minor, forces us to re-generate the whole application. This quickly becomes tedious and frustrating for the people involved.
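
A toy sketch of why schema-driven generation sits awkwardly with incremental work. This is not how ADF is implemented; `generate_app` and its text output format are made up for illustration, and the only assumption carried over from the story is that the generated application is a function of the complete schema.

```python
from typing import Dict, List

Schema = Dict[str, List[str]]  # table name -> column names


def generate_app(schema: Schema) -> Dict[str, str]:
    """Toy generator: one form definition per table, plus a menu listing every table."""
    files: Dict[str, str] = {}
    for table in sorted(schema):
        fields = "\n".join(f"  field {column}" for column in schema[table])
        files[f"{table}_form.txt"] = f"form {table}\n{fields}\n"
    files["menu.txt"] = "\n".join(f"open {table}" for table in sorted(schema)) + "\n"
    return files


# Iteration 1: the schema as first understood.
v1 = generate_app({"orders": ["id", "item"]})

# Iteration 2: the customer asks for one more column, so the whole app is generated again.
v2 = generate_app({"orders": ["id", "item", "quantity"]})

print(v1["orders_form.txt"] == v2["orders_form.txt"])  # False: the form was regenerated
```

In a sketch like this, any hand edits made to the files in `v1` are simply absent from `v2`, because the generator knows only the schema. Repeating that at every small schema change is the tedium described above.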

The three main ingredients have some inherent conflicts that cancel out their respective benefits. XP and ADF have the most significant, direct conflicts. The XP technical practices add no value when the application code is generated automatically. They are designed to support writing code from scratch. A code generator produces, for all practical purposes, pre-tested code. That’s one of the primary value-add attributes of that sort of tool.

At the same time, ADF and its embedded Java development environment, JDeveloper, are not designed to support incremental development. When we tried to test-drive code using that environment, we found that each local build took a minimum of four minutes. Imagine writing a small test case for a custom class that you intend to drop in at some point in the request/response cycle. You want to build and run tests to see if you have "red for the right reason." Just that bit takes four minutes or more. Then you add two or three lines of production code, and you want to build and run tests to see if your test case is now green. That bit takes another four minutes or more. Then you want to do two or three small refactorings, running the tests after each one. Each little step takes a minimum of four minutes. Imagine doing a whole project that way. Imagine the build times lengthening as the codebase grows. It is impossible to get into a state of flow…ever.
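
The arithmetic behind that complaint can be spelled out in a few lines of Python. Only the four-minute build comes from the project; the six-hour coding day, the one minute of editing per cycle, and the ten-second "fast" build are assumptions chosen purely for comparison.

```python
BUILD_SECONDS = 4 * 60  # the "minimum of four minutes" per local build


def waiting_seconds(builds: int, build_seconds: int = BUILD_SECONDS) -> int:
    """Time spent purely waiting for a given number of build-and-test runs."""
    return builds * build_seconds


def max_cycles(work_seconds: int, edit_seconds: int, build_seconds: int = BUILD_SECONDS) -> int:
    """Upper bound on edit-build-test cycles that fit into the working time."""
    return work_seconds // (edit_seconds + build_seconds)


# One test-drive pass as described: 1 red run + 1 green run + 2 or 3 refactoring runs.
print(waiting_seconds(1 + 1 + 2) // 60)  # 16 (minutes of waiting, at minimum)
print(waiting_seconds(1 + 1 + 3) // 60)  # 20

# Assumed six-hour coding day with one minute of actual editing per cycle.
day = 6 * 60 * 60
print(max_cycles(day, edit_seconds=60))                    # 72 cycles with 4-minute builds
print(max_cycles(day, edit_seconds=60, build_seconds=10))  # 308 cycles with 10-second builds
```

Under those assumptions, a single pass of writing a test, a few lines of code, and a couple of refactorings costs 16 to 20 minutes of waiting, and the number of feedback cycles available in a day drops by roughly a factor of four compared with a fast build.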

UX-centered design has inherent conflicts with XP. Because the application is subject to frequent change based on customer feedback from scheduled demonstrations or even from informal visits to the team work area, XP presents challenges for UX designers. The tools the designers use to create samples and prototypes of UI screens are in the nature of "art" tools. They are completely unlike "software development" tools. They are designed to enable the designer to create a highly detailed and polished visual representation of the UI; they are not designed to support rapid development of many alternative designs. To accommodate a fairly small change to the UI, the designer may have to do a considerable amount of re-work. There are ways to combine UX-centered design with adaptive software development, but they require specific and mindful attention; they don’t happen automatically.

UX-centered design has even more significant conflicts with ADF, or with any code generator. By nature, a code generator or UI framework produces a "standard" UI. Such a tool cannot include design considerations that were unknown at the time the tool was created. UX designers often craft highly customized UIs that are designed to create a particular experience for the people using the application. They include design elements that no one at the tool company ever imagined. It is literally impossible for a code generator to support completely new design ideas. Such a tool can only generate output that its creators envisioned and coded.

That is a significant advantage when we need to generate a standard, conventional application. It is a huge stumbling block when we want to create a hand-crafted UI to support a tailored user experience.

All frying pans are not created equal

The client’s architecture team had recommended Oracle ADF as a strategic toolset for the enterprise, with this project as one of the first to employ it. Management looked for an external team specializing in "agile" methods because that was one of the Good Ideas they had found. The two groups were destined to come into conflict.

When the external consultants explained to management that it was impossible to support the UX designers’ wishes using ADF, and impossible to practice "agile" development techniques using that tool, management asked for an alternative recommendation. The team recommended Java Server Faces (JSF).

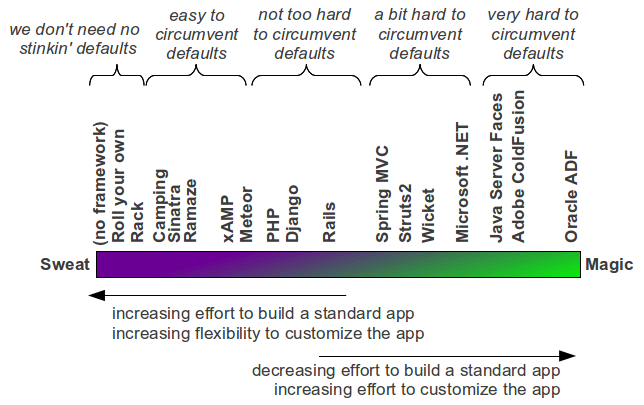

Let me digress for a moment. As I see it, webapp frameworks cover a spectrum of functionality ranging from "high sweat, low magic" at one extreme to "low sweat, high magic" at the other. When you know your solution will call for a significant amount of customization, you want to choose a framework from the "high sweat, low magic" end of the spectrum. These frameworks provide a minimum of out-of-the-box functionality and leave a high degree of freedom to create a custom solution. When you know your solution will be fairly standard and will not require much customization, you want to choose a framework from the "low sweat, high magic" end of the spectrum. These frameworks can generate most or all of the application code for you.

A common mistake in selecting a webapp framework is to assume that having a lot of out-of-the-box functionality is automatically and universally a good thing. What people overlook is that when you have to customize the solution heavily or in ways the framework designers didn’t anticipate, it can be harder to circumvent or disable the built-in functionality of a high-magic framework than it would have been to write the whole application from scratch with no framework at all. At that point you’re not getting the value from the framework you were expecting.

(You might disagree with the placement of frameworks on this spectrum. Please bear in mind it is not the purpose of this post to debate that.) From the standpoint of customizability, replacing ADF with JSF was almost no change at all. Bear in mind when I say "customize" in this context, I don’t mean just setting the configuration options the various products offer. I mean making the thing behave differently than its designers assumed it should behave. When you find this is necessary at all, it might raise a red flag in your mind. It might suggest you haven’t chosen a framework that is appropriate to the need. Otherwise, you wouldn’t have to work around the framework. You might have to do some configuration, but you wouldn’t actually have to work around the framework.

Why did the consultants recommend JSF? Because it was the framework they had used on their previous engagement, so they were confident in their delivery estimate and felt they could make an appropriate fixed-price bid for the project based on that estimate. They didn’t consider what would happen when we tried to implement the UX designers’ cutting-edge UI ideas using a framework whose capabilities were limited to a boilerplate design approach. The client manager told us the day he had to go to the budget committee to ask for more funding was the single worst day of his entire career.

Through a long stretch of the project, we were spending around 90-95% of development time just making tweaks to the UI behavior in an attempt to implement the functionality the UX designers wanted. Meanwhile, the UX designers were becoming frustrated because time after time they had to back off from a design point when we discovered it simply could not be implemented using the selected technologies. They started to feel as if we would be unable to deliver a suitable user experience.

Not the only cooks in the kitchen

In the meantime, the architecture group did not enjoy the fact that their own management had set aside their recommended tool in favor of one preferred by outside consultants. They had already assessed JSF, among other alternatives, before determining that ADF was the appropriate strategic choice for the enterprise. Besides that, in their view, ADF was JSF "on steroids." ADF wraps JSF, among other components. A rich and nicely-implemented set of JSF UI widgets is included. ADF generates apps that live in a ready-to-roll execution environment based on all the usual Java standards. To them, moving to JSF felt like taking a step backward. Two, maybe.

All along the way, the architecture group generated prototypes of the application that supported the functionality that had been defined to date. They were alert to every speed bump the XP team hit, and were quick to demonstrate working prototypes to management and stakeholders on each occasion.

The reason they could not get any traction to reinstate ADF as the tool of choice was that stakeholders and management were convinced of the importance of having a unique, cutting-edge, sophisticated user experience. They believed this would be an important factor for capturing market share and retaining customers. As a result, the UX design group had the greatest clout for controlling the direction of the design.

Was it necessary for that app to have a cutting-edge UI? It wasn’t and still isn’t my call to make. Maybe they had market research that told them it was necessary. I can only say the necessity was not obvious to me.

This was not a social networking app or anything of the kind. It was basically a data entry app. Users filled in a form to order an electronic product. The app would prepare the product in the form of a PDF file for download. There was a payment transaction involved. There were a couple of web service calls to other internal apps. That’s it in a nutshell, without divulging identifiable information about the whole thing. There was one especially interesting intelligent search facility under the covers, but that had no effect on the UI design. Our team didn’t get to write it, anyway.

In my view (which doesn’t count), the people who would use the app really couldn’t care less about fancy-schmancy user interface behavior. If anything, the fact that sections of the form automatically disappeared and reappeared while you were using the app seemed likely to cause confusion. In fact, most of our acceptance criteria had nothing to do with the base functionality of the app. They had to do with whether various UI elements appeared, disappeared, lit up, went gray, expanded, or contracted under the right conditions. For a data entry app that an office worker would use over and over again all day long, that sort of thing doesn’t add value. What adds value in that context is a simple, predictable, keyboard-friendly app in which UI elements don’t change position on the screen or disappear altogether.

ADF’s default look and feel isn’t bad, and they could brand the thing using ADF’s weird (but functional) "skinning" feature. They could have gotten this bad boy out the door in a matter of weeks. In hindsight, the client’s architecture group had it right all along. Of the three Good Ideas, the one that fit the particular situation best was the code generator.

So what?

The primary "so what?" in this story is that piling on a whole bunch of Good Ideas isn’t necessarily a good idea. You have to understand the potential for the various Good Ideas to interfere with one another. The most appropriate Good Idea in any given situation depends on the context.

A secondary "so what?" is that the tools you used on your last job, or the ones you happen to be familiar with, or the ones you’re interested in learning just now, might not necessarily be the best ones for your next project.

Best advice I can offer is: Don’t eat the broken glass if you can avoid it. If you can’t avoid it, then, well, dip it in chocolate or something, and good luck.

Windows XP came along after eXtreme Programming.

Thanks, George. I covered my mistake with clever re-wording. No one will be the wiser.

Wow – ADF. The acronym goes back to the desktop/green screen Oracle Forms days. I used to work for them and use Oracle Designer. It would generate out a whole Forms app from the database design and some defaults. One of their mantras was “100% generation”. At the time I thought this was a great idea. Most enterprise apps are very repetitive in nature and this takes a lot of the pain out. Later versions of OD would generate out web based apps using Oracle PL/SQL and the application server that fronted the database.

This only works well if the database doesn’t change often, or you’ve got a green field and want to get something up quickly. It doesn’t work that well incrementally because it’s hard to throw irrelevant stuff away. As soon as you get humans (or money) in the mix, with their damned tendency to start wanting exceptions and alterations, you’re lucky to get 80% :).

Another interesting thing is that these days I mostly do Rails work. The experts don’t use the generators at all. What the generators do is wrap your database in a very brittle API, mostly because Active Record surrenders completely to the database. There’s been a lot of talk recently about not using a persistence layer at all in the early stages of development until you become sure of the model, which I suppose moves us closer to the UX ideal. Avdi Grimm http://devblog.avdi.org/ has been writing about this a lot. It’s usually the second iteration, after delivery, when customers want things to be ever so slightly different, that this monolithic API approach starts to break.

Great post, though. Speaking of chocolate-covered glassy garlic, does anyone remember the corporate "best of breed" thing from the ’90s? The one Oracle ridiculed by making models of the 747 with the shuttle engines?

Francis,

Wow – Oracle Forms. Yeah, I remember that.

There has always been interest in simplifying development by generating the routine parts of apps, and sometimes whole apps. I’ve worked with quite a few generators, CASE tools, and so forth. Things like Telon and Rational Rose, etc. There’s a place for them. Every app doesn’t necessarily require experts.

It’s easy to subtract ActiveRecord from Rails if you don’t need it. In corporate environments, business apps often don’t access databases directly anyway. They go through an ESB or similar facility. I’ve found that with highly flexible tools like Rails, it’s feasible to tweak the boilerplate code so that when people use the generators they get a skeleton app that meshes with the organization’s coding/architectural standards. This is a good fit in corporate IT environments. You can ensure the non-experts won’t forget to include hooks for business activity monitoring or logging etc., and that all apps will be branded the same way.

The past few years I’ve been thinking that we ought to reconsider the way we structure organizations to support IT needs. SOA offers a clean approach to separating centrally-managed strategic IT assets like CRM, ERP, BRU, Data Warehouse from line-of-business-specific tactical assets like business apps. When writing strategic code, we need the old familiar design goals of maintainability, reusability, and performance. When writing a tactical business app, we need a different design goal: Easy, cheap, and rapid replacement. Code generators are a good fit for that, both because of the tactical nature of business apps and because of the difficulty in finding enough “experts” to go around. We might see yet another rise in demand for that sort of tool in the next few years.

In the story about UX, XP, and ADF, the client company’s primary business asset is its collection of data. Therefore, the products closest to the data are strategic assets for that company. Applications that provide services to customers based on the data are, themselves, not strategic assets; they are tactical solutions. That’s the key business reason I think ADF is a good fit there. When the schema changes you could say, “Oh no! We have to re-generate the app!” or you could say, “Oh, boy! All we have to do is re-generate the app!” depending on how you look at it.

I am by no means suggesting that all programming should be done using code generators! Other companies will have different needs and priorities.

I haven’t heard of the 747 with shuttle engines. It’s funny to think of Oracle poking fun at anyone else for overselling “enterprise-class” products. 747 with shuttle engines is a good metaphor for it. I’ve used the metaphor of the SR-71 Blackbird in a similar way: http://wp.me/p1CUP0-T

Thanks for the comment!