This is the second of three posts dealing with aspects of management that I consider significant in choosing management techniques and metrics for software development and support:

- Iron Triangle management

- Process model (this post)

- Delivery mode

In my experience, no two organizations, and no two teams within the same organization, use exactly the same process model for software delivery; nor do they keep the same process intact forever. Everyone tailors their process to their own needs and to the realities of their own situation. In addition, most organizations use more than one process, depending on the nature of each particular initiative or work stream.

That is as it should be. Yet, despite the many variations, there are common patterns that can help us understand how we might plan and track the progress of software development initiatives and support activities in ways that help us make management decisions, as opposed to merely following a published guideline for planning and tracking.

My observation is that there are four basic models for software delivery. We can think of these as reference models, in that people don’t actually use any one of them in pure form. Although reference models are not used directly, their definitions provide usefully simple expressions of key concepts. They are:

- Linear

- Iterative

- Time-boxed

- Continuous flow

The tailored processes I mentioned, as well as any number of branded and/or commercial methodologies, borrow elements from two or more of these reference models. Furthermore, real-life processes emphasize different aspects of the work depending on the particular problems of interest in a given context, such as risk mitigation, alignment with stakeholder needs, software quality, or time to market.

Even considering all that, it seems to me that each real-world process leans more heavily toward one of the four reference models than the other three. By understanding that, we can choose metrics and other management tools appropriate to the situation at hand, regardless of buzzwords, labeling, or “belief” (or wishful thinking) about how the work is really being done. As I mentioned in part one of this series, people will say whatever they think they need to say about the process they are using, as buzzwords wax and wane in popularity. It’s more interesting to observe what people do.

Linear model

The linear model of software development is based on the premise that software is best developed (or can only be developed) by working through a series of discrete steps in a specific sequence. The steps are usually defined as the following, or as a variation on the following:

- System requirements

- Software requirements

- Analysis

- Program design

- Coding

- Testing

- Operations

The list above is adapted from Winston Royce, “Managing the Development of Large Software Systems”, in Proceedings of IEEE WESCON 26 (August 1970): 1–9.

The linear model is sometimes called the System Development Life Cycle (SDLC), or other words that can “spell” SDLC. Some people refer to it disparagingly as the “waterfall” model. Whatever you choose to call it, the fundamental assumption is that software can and should be developed by taking the work serially through a sequence of steps such as those listed. Ideally, a single pass through the steps will yield a good outcome. When it doesn’t, the assumption is that someone made mistakes in doing their portion of the work during one of the earlier steps.

It’s a commonplace to hear that “nobody” uses the linear model in real life anymore. In my experience, that is not the case. Many organizations do base their formal delivery process on the fundamental assumptions of the linear model. While they have change control processes and defect repair processes in place, those are considered to be corrective actions that “shouldn’t” be necessary, if only everyone would just do their jobs correctly the first time.

The assumption that linear development is a valid approach is very, very prevalent. For that reason, nearly all real-world process models have this assumption built in at a deep level, even if they are formally structured around one or more of the other three reference models. Therefore, we cannot ignore the linear reference model in our assessment of real-world models, or we risk overlooking something of importance.

The other process models can work with either approach to Iron Triangle management. However, a strictly linear model precludes an adaptive approach. Even when change control procedures introduce some measure of flexibility, a mentality grounded in the linear model usually makes those procedures so cumbersome that an adaptive approach is impractical. A common symptom is that lead times tend to be measured in months or years even for relatively trivial initiatives.

Iterative model

An iterative model assumes that a single pass through the canonical development steps will not result in a good outcome because it is infeasible to know everything about the solution in advance, and because unexpected events and new learning will inevitably occur despite the most careful up-front planning.

I don’t want to give the impression that these models evolved serially, but in some cases the evolution of a model has been informed by the problems people experienced with earlier models. When the industry moved toward iterative models, the move was prompted in part by particular problems people had experienced in applying the linear model. Specifically, the key problems were very long lead times and poor alignment with stakeholder needs.

By revisiting the requirements repeatedly, or iterating over the requirements, people sought to bring products to market in less time and align the functionality more closely with stakeholder needs. The key philosophical difference between the iterative and linear models is that the iterative model does not assume that changes occur only because someone made a mistake in an earlier phase. Learning and change are considered normal.

There are more variations and more examples of iterative methods than any other kind. Some iterative processes call for passing through all requirements in each pass and adding depth or refinement to the functionality in each pass. Others call for delivering high-value features early and in a complete form, and then delivering additional features in subsequent iterations. Still others call for developing a subset of functionality such as a prototype and then refining the design based on feedback from stakeholders. The simplest form of an iterative model simply repeats the linear model multiple times, biting off a subset of the scope each time.

Different methodologies based on the iterative model have different priorities. Some are intended to improve time to market or business alignment, some are intended to manage risk effectively, and some are intended to assure high quality in terms of some key criterion such as defect density or usability. So, methodologies may differ in their details, but any iterative process has the characteristic that a single pass through the requirements is not expected to result in the final version of the product.

Time-box model

A time-box is a predefined time period within which a given set of objectives is to be met. In the event the objectives are not met, we do not extend the time-box to allow for completion. Instead, we try to adjust our practices such that we can complete the next set of objectives within the limits of the next time-box.

At the risk of suggesting process model evolution has been serial, I will observe that the concept of the time-box was a response to problems people experienced in applying the iterative model. While iterations did help solve the problem of poor alignment with stakeholder needs, many software delivery projects had difficulty staying on schedule.

The basic iterative model does not require anything to be delivered to production or to the market in each iteration, although some of the specific methodologies based on the model do so. There was still a tendency, as with the linear model, to extend timelines whenever there were challenges in delivering the expected functionality within the expected time. Of the three dimensions of the Iron Triangle, schedule seemed to be the most susceptible to unplanned change.

One answer to this problem was to define fixed time intervals within which working software had to be produced. The idea was to force teams to find ways to get a set amount of work done by a set time, again and again. The main purpose was to avoid the problem of schedule slippage.

Methodologies based on the time-box model usually resemble Matryoshka dolls, with time-boxed activities layered one inside another. A fixed release time-box contains two or more fixed iteration time-boxes. Each iteration contains two or more working days, which are treated as time-boxes for certain purposes. Each regular working day within an iteration may contain still smaller time-boxes to drive fine-grained technical activities, similar to (or based directly on) the Pomodoro Technique for personal time management.

An iteration may also contain time-boxed activities for touching base about progress and impediments, short-term planning, stakeholder demonstrations, and team-level process improvement exercises. Different methodologies based on this model prescribe different time-boxes and different specific activities, but the general idea is that the time-boxes limit the time available to complete specific packages of work with the overall goal of delivering a production-ready increment of the solution in each iteration.

A side effect of the time-box approach has been that scope became the dimension of the Iron Triangle that was most susceptible to unplanned change. The old question, “When will all the features be delivered?” was often replaced by a new question, “How much functionality will be delivered by the release date?”
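The new question can be worked as simple arithmetic. Here is a rough sketch, assuming a fixed number of remaining iterations, an observed capacity per iteration, and a prioritized backlog with relative sizes. The function name, feature names, and numbers are all hypothetical, and real teams will have their own rules for what "fits."

```python
def scope_by_release(backlog, capacity_per_iteration, iterations_remaining):
    """Split a prioritized backlog into what fits by the release date and what does not.

    The schedule is fixed, so the capacity budget is fixed; scope is the variable.
    Items are taken strictly in priority order, and everything from the first
    item that does not fit onward is deferred.
    """
    budget = capacity_per_iteration * iterations_remaining
    included, deferred = [], []
    for name, size in backlog:
        if not deferred and size <= budget:
            included.append(name)
            budget -= size
        else:
            deferred.append(name)
    return included, deferred


# Hypothetical backlog: (feature, relative size), highest priority first.
backlog = [("login", 8), ("search", 13), ("checkout", 20), ("reports", 5)]
included, deferred = scope_by_release(
    backlog, capacity_per_iteration=10, iterations_remaining=3
)
print("By the release date:", included)
print("Deferred:", deferred)
```

The sketch deliberately refuses to skip ahead to a smaller, lower-priority item. That is one possible policy, chosen here only to keep the priority order visible.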

Continuous flow model

The continuous flow model borrows, adapts, and combines concepts from a number of sources, including Theory of Constraints, the Toyota Production System, Lean Thinking, pull systems, Complex Adaptive Systems, and Systems Thinking. The basic premise is that software development is a value stream whose purpose is to deliver something of value (a capability supported by a software product) to a customer. The customer defines the meaning of value.

Let me reiterate that these models did not evolve serially, but it may be a useful oversimplification to say that people turned to the continuous flow model because of problems they experienced in applying the time-box model. Any process we might use will incur a certain amount of overhead just to satisfy the formalities of the process itself. That is not a problem, provided we are getting value in return for the overhead. As teams and organizations become increasingly proficient with time-box methods, the overhead of planning and managing the various time-boxes yields proportionally less value.

Some teams and organizations reached a point where they were spending proportionally too much time on process-related overhead activities. Rather than shortening time to market and improving alignment through frequent stakeholder feedback, the time-box model was hindering delivery by imposing too much ceremony on day-to-day work, and interfering with stakeholders’ own work flow by asking them for too-frequent participation in formal feedback activities involving very fine-grained solution increments.

One answer to this problem has been to dispense with the fixed time-boxes and instead focus on keeping a small number of discrete work items flowing smoothly and steadily through the process from start to finish. Methodologies based on this model draw heavily on Theory of Constraints and Systems Thinking. The key concepts are:

- Focus on customer-defined value

- Keep the work moving steadily

- Eliminate waste from the process

Representative examples

Quite a few branded software development methodologies have been created over the years. Most of them are hybrids that combine ideas from two or more of the four basic reference models, and most were developed in the trenches in response to the needs of a specific software development project before being promoted as general solutions.

Most software development organizations officially use one or more branded or home-grown methodologies that exhibit a mixture of characteristics from two or more of the reference models. Within those organizations, individual teams or working groups adapt the methodologies to their own needs. There are many, many variations.

To help us understand management practices and metrics that make sense in a given context, it is helpful to break down the methodologies we see around us in terms of the inherent characteristics of the four reference models. Here are a few examples of commercial or branded methodologies to give you an idea of what I mean.

Please bear in mind these are not meant to be complete descriptions of the various processes or methodologies; they are only illustrations of the reference models. If you want detailed information about any of these processes, please refer to their respective official websites or other information sources.

In addition, please bear in mind the list is not intended to include every process framework or methodology that was ever conceived. It is only a list of examples to illustrate the reference models. There are other noteworthy methods, such as Thoughtworks’ method (which has no formal name, as far as I know), Industrial XP (created by Joshua Kerievsky and promoted by Industrial Logic), and the Crystal Methodologies (created by Alistair Cockburn). Most of them represent some variant of the Iterative or Time-box reference model, and we already have several examples in that category.

Finally, please bear in mind that the descriptions are not intended as criticisms or comparisons. They are only meant to point out how each process maps to one or more of the reference models. There are no value judgments here with regard to whether one process is “better” than another. The point is to be able to recognize the salient characteristics of the process actually in use in any given organization so that we can make appropriate context-aware choices of metrics and other management tools and techniques.

Andersen Foundation Method/1: (Linear model)

In the mid-1980s, I worked on a project that used a methodology called Andersen Foundation Method/1, a product of Andersen Consulting. Andersen Consulting has since become Accenture, and that company still markets a product line with names like Foundation something/1, although these are considerably evolved from their 1980s-era ancestors.

The version I used long, long ago was based on the strict linear process model. It was very specific to the Cobol programming language and a hierarchical database management system from IBM called IMS/DB. Most of its documentation artifacts spelled out very detailed specifications for IMS-specific implementation details. It was typical of the formal software development methodologies of the era in that it was firmly grounded in the linear model and was closely tied to specific technologies.

V-Model: (Linear model)

The V-Model was first elaborated at Hughes Aircraft in the early 1980s in connection with a proposal for a major FAA project (see Wikipedia article for more history). The V-Model emphasizes the importance of functional and non-functional verification at all levels of system design and creation, including projects that involve both hardware and software development.

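Conceptually, the V-Model is drawn as the letter V, with requirements and design elaborated down one side and the corresponding verifications on the other.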

As requirements are elaborated at each successive layer, the corresponding verifications are also defined. One way to think of the V-Model is as an early incarnation of the concept of specification by example or acceptance-test driven development, except that in those days the tooling did not exist for executable specifications. Once the details have been elaborated, both on the functional side of the V and on the verification side, then development proceeds, with the verifications carried out at each level of detail all along the way.

Spiral Model: (Iterative model)

Barry Boehm invented the Spiral Model in the mid-1980s and published it in “A Spiral Model of Software Development and Enhancement”, ACM SIGSOFT Software Engineering Notes, ACM, 11(4):14-24, August 1986.

The basic idea is to build the product through a series of iterations through design and prototyping activities, gradually adding functionality and design refinements with feedback from stakeholders.

A diagram of the Spiral Model is available at http://qualityguru.files.wordpress.com/2010/08/sm2.png

You should not assume that iterative models necessarily imply rapid development. As originally envisioned, the Spiral Model called for iterations of 6 months to 2 years in length. It was, in part, a response to the fact that large-scale software development projects were so long that they tended to drift away from customer needs by the time they were finally delivered. By demonstrating gradually-refined prototypes at regular intervals, the goal was to keep development aligned with needs over the course of very long release cycles.

Projects using the Spiral Model need not define iterations of such long duration. The model itself does not depend on any particular iteration length.

The Spiral Model addresses problems caused by very long release cycles, but does not explicitly break with traditional notions of SDLC-style development steps within each iteration.

You may be accustomed to thinking of “iterative” as implying “time-boxed,” but this is not so. As with most iterative methods, Spiral does not call for a production-ready solution increment to be delivered in each iteration. It only calls for a prototype. Prototypes are, by definition, meant to serve as examples and to be discarded. They are not meant to become the basis for the “real” code.

Rational Unified Process: (Iterative model)

The Rational Unified Process (RUP) was created by Philippe Kruchten at Rational Software in 1996, based in part on the Objectory process the company had acquired from Ivar Jacobson. IBM acquired Rational Software in 2003, and still sells RUP. It is one of the most widely-used and best-known software development process frameworks.

[Image: diagram of RUP phases and disciplines, from Wikipedia]

RUP is not a prescriptive methodology, but rather a customizable process framework specifically designed to support software development activities. Depending on the characteristics of a particular project, the team may elect to use all or only a subset of RUP practices and artifacts.

The main focus of RUP is on risk management, rather than on technical practices or product lead time.

RUP is firmly rooted in the SDLC mentality in that it defines four discrete development phases: Inception, Elaboration, Construction, and Transition. The four phases map naturally to typical SDLC process steps.

The RUP model cross-references specialized activities, which it calls disciplines, with the four phases, including Business Modeling, Requirements, Analysis & Design, Implementation, Test, and Deployment. Thus, RUP maintains traditional thinking regarding the need for expert specialists in various aspects of software development.

A unique feature of the RUP model is that it suggests the relative workload within each discipline at each phase of development. This is shown in the diagram by the thickness of the colored regions that represent the contribution of each discipline as the team progresses through the four phases.

RUP as a commercial product includes software tools to maintain a common repository of project information so that the various artifacts will all be based on the same version of the same information. This is a way of mitigating the risk of miscommunication between specialists.

The four phases may be carried out within each iteration, or there may be multiple iterations for each phase. This is up to the project team or project management. As with most iterative processes, RUP does not require the delivery of production-ready solution increments in each iteration, or even with each pass through the four phases.

Evo: (Iterative model)

Evolutionary Project Management, or Evo, was developed by Tom Gilb. It is a holistic, engineering-focused approach that aims to deliver the maximum value to all stakeholders of a project. It is based on ten basic principles (see http://nrm.home.xs4all.nl/EvoPrinc/index.htm):

- Real results, of value to real stakeholders, will be delivered early and frequently.

- The next Evo delivery step must be the one that delivers the most stakeholder value possible at that time.

- Evo steps deliver the specified requirements, evolutionarily.

- We cannot know all the right requirements in advance, but we can discover them more quickly by attempts to deliver real value to real stakeholders.

- Evo is holistic systems engineering – all necessary aspects of the system must be complete and correct – and delivered to a real stakeholder environment – it is not only about programming – it is about customer satisfaction.

- Evo projects will require an open architecture – because we are going to change project ideas as often as we need to, in order to really deliver value to our stakeholders.

- The Evo project team will focus their energy, as a team, towards success in the current Evo step. They will succeed or fail in the current step, together. They will not waste energy on downstream steps until they have mastered current steps successfully.

- Evo is about learning from hard experience, as fast as we can – what really works, and what really delivers value. Evo is a discipline to make us confront our problems early – but which allows us to progress quickly when we really provably have got it right.

- Evo leads to early, and on-time, product delivery – both because of selected early priority delivery, and because we learn to get things right early.

- Evo should allow us to prove out new work processes, and get rid of bad ones early.

A diagram of Evo is available at http://www.gilb.com/tiki-page.php?pageName=Evolutionary-Project-Management

Evo emphasizes building truly complete solution increments in each iteration, with well-designed architecture and full support for non-functional requirements, and providing value to all stakeholders. It also takes a more expansive view of the meaning of “stakeholder” than most other processes.

Scrum: (Time-box model)

In 1986, Hirotaka Takeuchi and Ikujiro Nonaka described a holistic or rugby approach to product development, in which a cross-functional team created a new product collaboratively, passing the ball to one another as their various skills were needed. The idea gained wider recognition with the publication of the 1991 book, Wicked Problems, Righteous Solutions, by Peter DeGrace and Leslie Stahl. Shortly after that, Jeff Sutherland, John Scumniotales and Jeff McKenna at Easel Corporation, and Ken Schwaber at Advanced Development Methods crafted a process framework based on the rugby approach and named it Scrum. Since the early 2000s, Scrum has become one of the most widely-used process frameworks for software development, superseding RUP in general popularity.

A diagram of Scrum is available at http://www.mountaingoatsoftware.com/scrum/overview

Scrum calls for a cross-disciplinary team comprising whatever skills and knowledge are necessary to complete the objectives of the project. It defines specific roles that have specific responsibilities with respect to the process as such. Scrum requires that a production-ready solution increment be delivered in each iteration. However, it does not prescribe any particular practices or methods for the team to use. Ken Schwaber has described Scrum as “a lightweight management wrapper for empirical process control.”

Extreme Programming: (Time-box model)

Extreme Programming (XP) was developed in the trenches of a corporate development project, and was not created as an academic exercise or theoretical model. As such, it is a very no-nonsense approach to software development. For the same reason, it is strongly focused on a single team carrying out a single project.

In 1996, Kent Beck became the leader of a project at Chrysler to develop a payroll system. The project was known as C3, short for Chrysler Comprehensive Compensation System. XP did not create anything completely new. The basic idea was to put together proven-effective software development practices from the past and “turn all the knobs up to ten.”

XP combines a time-boxed iterative process model with a set of software engineering practices. It is unique in that it specifies particular development practices and not just a management framework.

A diagram of XP is available at http://osl2.uca.es/wikiCE/index.php/Extreme_programming

The Matryoshka doll metaphor applies very nicely to XP. The cited diagram shows the various feedback loops built into the XP process model. From seconds to minutes to hours there are no explicit time-boxes. From one day to days to weeks to months, each loop adheres to a predefined time-box. In practice, many teams define explicit time-boxes for the smaller loops, as well, as a way to manage their work flow.

Like Scrum, XP calls for a production-ready solution increment to be delivered with each iteration.

Kanban Software Development: (Continuous flow model)

The term kanban has become popular in software development circles in the past few years. Unfortunately, it has two different meanings. In a broad sense, kanban is a change management method for effecting process improvement and organizational transformation. In a narrower sense, a software development process based on continuous flow is also known as kanban.

The basic idea of kanban as a software development process is to assure smooth work flow by controlling the level of work in process (WIP). The main mechanism to provide high throughput is to adjust the WIP limits in specific steps of the process, guided by principles of the Theory of Constraints and Lean Thinking.
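To make the WIP-limit idea concrete, here is a toy sketch of a board in which work is pulled from the downstream end and a step accepts a new item only when it is under its limit. The step names, limits, and the one-move-per-tick rule are my own simplifying assumptions for illustration, not part of any published kanban definition.

```python
from collections import deque


class Board:
    """A toy kanban board: ordered process steps, each with a WIP limit."""

    def __init__(self, steps):
        # steps: list of (name, wip_limit) pairs in process order (hypothetical values)
        self.names = [name for name, _ in steps]
        self.limits = dict(steps)
        self.columns = {name: deque() for name in self.names}
        self.done = []

    def has_capacity(self, name):
        return len(self.columns[name]) < self.limits[name]

    def pull(self, backlog):
        """Advance the board by one tick, working from the last step backward.

        Processing downstream-first means an item moves only when the next
        step has room for it, so work is pulled rather than pushed.
        """
        last = self.names[-1]
        if self.columns[last]:
            self.done.append(self.columns[last].popleft())
        for i in range(len(self.names) - 1, 0, -1):
            upstream, downstream = self.names[i - 1], self.names[i]
            if self.columns[upstream] and self.has_capacity(downstream):
                self.columns[downstream].append(self.columns[upstream].popleft())
        # New work is started only while the first step is under its limit.
        first = self.names[0]
        while backlog and self.has_capacity(first):
            self.columns[first].append(backlog.popleft())


board = Board([("analysis", 2), ("development", 1), ("test", 1)])
backlog = deque(["A", "B", "C", "D", "E"])
for _ in range(3):
    board.pull(backlog)
print({name: list(items) for name, items in board.columns.items()})
```

Changing a limit and rerunning the loop is a crude way to see how the amount of work started depends on capacity downstream.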

The kanban software development process came along at a time when the popularity of the time-box model was at an all-time high. One of the challenges people faced in applying the time-box model was the difficulty of producing a truly production-ready solution increment in a short iteration. While iteration length in the Spiral model was originally measured in months, by 2010 most teams were running iterations of one or two weeks’ duration. It seemed as if we were running into an inherent boundary of the time-box model; once you drive down the iteration length to a certain level, it becomes significantly more difficult to adhere to the model.

To address this issue, the kanban development method uses the concept of cadence. A cadence is a regular rhythm or heartbeat for the work, but isn’t treated as a time-boxed iteration. By decoupling the development cadence from the release cadence, teams can deliver a production-ready solution increment in each release without necessarily having to do so within the scope of a much shorter development cadence.

Naked Planning: (Continuous flow model)

The lightest-weight process model I have seen was developed by Arlo Belshee when he was running a software startup incubator. The goal was to take a new idea from concept to cash (at least one real sale) in six weeks. To meet this ambitious goal, developers needed a process model that wasted no time in process compliance overhead. The result was this Lean-based model, called Naked Planning.

If this description is wrong, it is my fault. It is based on my memory of a conversation with Arlo a few years ago. Hopefully the description will be adequate for the purpose of this blog post, which is only to provide a few summary examples of real-world processes that illustrate the four reference models.

A new idea at the time was the MMF, or minimum marketable feature. Since the goal was to realize a sale no later than six weeks from project inception, the unit of product represented the smallest subset of functionality that a real customer would be willing to pay for. What was unique about this was the fact that a deliverable was defined by the customer’s willingness to purchase (which is the most definitive expression of customer-defined value), and not by criteria such as level of effort, estimated development time, technical complexity, or budget allocation (all of which are of interest to development teams, but not necessarily to customers).

Naked Planning is a single-piece pull system. Given a prioritized list of MMFs, the development team takes the top item in the list and builds it. Whether the item is large or small makes no difference; it is the next-most-important thing to do from the customer’s perspective, so the only activity that focuses on “value” is to build that item. Thus, Naked Planning as such does not directly deal with planning activities upstream from development. In that sense you might think of it as a partial process.

Staged Delivery: (hybrid of Linear and Iterative models)

The idea of staged delivery was proposed by different luminaries in the field of software methodologies, including (independently) Tom Gilb and Steve McConnell. It is essentially a linear SDLC model with one adjustment: Once the high-level, initial work has been completed, the detailed development work of low-level design, coding, testing, and deployment is repeated through two or more stages. Thus, at the “front end” of a project it is a plain linear SDLC process, and at the “back end” it is an iterative process.

Staged delivery embraces the reality that “[t]he existence of the software artifact changes the requirements for it.” (see C2 wiki: http://c2.com/cgi/wiki?EvolutionaryDelivery)

Importantly, each staged delivery provides real business value to stakeholders. The software is put into production and used for real work. Feedback from stakeholders informs the plan for the next release, and is based on real-world use of the product rather than on contrived use in a test environment.

DSDM: (hybrid of Linear and Iterative models)

The Dynamic Systems Development Method (DSDM) represents another way in which elements of the linear SDLC model and elements of the iterative model can be combined.

In this case, each iteration does not result in any portion of the product in a fully-elaborated and production-ready form. Instead, the iterations represent major milestones in an SDLC-style development process.

The iterations provide opportunities for feedback, but with a different focus than in the case of staged delivery. With DSDM, a prototype is created and used to solicit stakeholder feedback. Incorporating that feedback into the next iteration, a more fully-elaborated prototype is developed and used to solicit further feedback. Ultimately, the “real” product is delivered.

SDLC elements include the sequential nature of the process and the emphasis on end-of-iteration reviews and sign-offs. Iterative elements include the delivery of specific artifacts per iteration and the emphasis on customer feedback to drive improvements in the design.

MSF: (hybrid of Linear and Iterative models)

The Microsoft Solutions Framework (MSF) is a model some people refer to as an iterative waterfall. A large program is broken up into a series of smaller projects. The program is completed by delivering each of the smaller projects in turn. This avoids many of the problems inherent in the linear SDLC model when it is applied to large programs.

A diagram of the MSF is available on Microsoft Technet at http://technet.microsoft.com/en-us/library/Bb497060.ors01_02_big%28en-us,TechNet.10%29.gif.

Each of the smaller projects is carried out in an SDLC style. If you unrolled the diagram and laid it out flat, it would look like the canonical “waterfall” model.

Scrumban (hybrid of Time-Box and Continuous flow models)

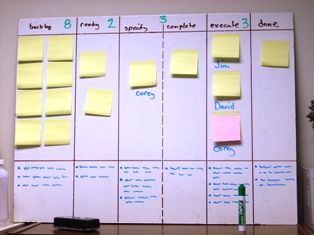

“Scrumban” is a term coined by Corey Ladas to describe a Scrum process that evolves by incorporating ideas from Lean Thinking and the Kanban software development approach. There is no rigid definition of scrumban. It is a blending of Scrum and Lean concepts and techniques.

Source: Lean Software Engineering

In the illustration, note the board is not a kanban board, strictly speaking, as there are no queues between the value-add steps, and yet it does show WIP limits, as a kanban board would do. What happened is that a Scrum team added WIP limits to help themselves see how the work was flowing, and to help them improve their delivery effectiveness.
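To make the idea of a WIP limit concrete, here is a minimal sketch of a board that refuses to accept more work in a column than its limit allows. The `Board` class, the column names, and the limits are all hypothetical, chosen only for illustration; they are not taken from the illustration or from any particular tool.

```python
class Board:
    """A task board whose columns each carry a WIP (work-in-process) limit."""

    def __init__(self, wip_limits):
        # wip_limits maps column name -> maximum number of items allowed there
        self.wip_limits = dict(wip_limits)
        self.columns = {name: [] for name in wip_limits}

    def has_capacity(self, column):
        """True if the column is below its WIP limit."""
        return len(self.columns[column]) < self.wip_limits[column]

    def add(self, column, item):
        """Put a new item into a column; refuse if the limit is reached."""
        if not self.has_capacity(column):
            return False
        self.columns[column].append(item)
        return True

    def move(self, item, src, dst):
        """Move an item between columns; refuse if dst is at its limit."""
        if item not in self.columns[src]:
            raise ValueError(f"{item!r} is not in column {src!r}")
        if not self.has_capacity(dst):
            return False
        self.columns[src].remove(item)
        self.columns[dst].append(item)
        return True


# Hypothetical columns and limits, for illustration only.
board = Board({"Develop": 2, "Test": 1})
board.add("Develop", "story A")
board.add("Develop", "story B")
print(board.add("Develop", "story C"))             # False: Develop is full
print(board.move("story A", "Develop", "Test"))    # True
print(board.move("story B", "Develop", "Test"))    # False: Test is full
```

When a move is refused, the team can see at once where work is piling up, which is the visibility the WIP limits were added to provide.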

A useful aspect of scrumban is that it represents a pragmatic approach to improving a defined process model.

Implications

A common error is to assume that the metrics associated with a particular process model (if any) are always applicable when that process model is used. In my experience, the choice of metrics depends on the combination of the approach to Iron Triangle management and the process model. In some cases, the delivery mode (covered in the next post) may also influence the choice of metrics, but it is less significant.

For example, Scrum is currently one of the most popular process models for software development work. It is an example of a time-box process model. Due to its popularity, Scrum is used in a very wide range of contexts and domains. The majority of teams using Scrum plan their work by sizing the work items in terms of an arbitrary, relative point system which they call “story points,” “T-shirt sizes,” “units,” or a similar name. The number of such units the team can produce in a single iteration (called a “sprint” in Scrum parlance) is the team’s velocity. By making empirical observations of velocity, one can project the team’s likely future performance by drawing a trend line from a series of observations.
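The trend-line projection can be sketched in a few lines. This is only one plausible way to do it, assuming an ordinary least-squares line through the observed velocities; the function names and the sample numbers are hypothetical, and a real team might prefer a simple average or a range instead.

```python
def velocity_trend(velocities):
    """Fit a least-squares line through per-sprint velocities.

    Sprints are numbered 1, 2, 3, ... in the order observed.
    Returns (slope, intercept).
    """
    n = len(velocities)
    if n < 2:
        raise ValueError("need at least two velocity observations")
    sprints = list(range(1, n + 1))
    x_bar = sum(sprints) / n
    y_bar = sum(velocities) / n
    sxx = sum((x - x_bar) ** 2 for x in sprints)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(sprints, velocities))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def project_velocity(velocities, sprints_ahead):
    """Extend the trend line to forecast velocity for upcoming sprints."""
    slope, intercept = velocity_trend(velocities)
    n = len(velocities)
    # A negative forecast makes no sense, so clamp at zero.
    return [max(0.0, intercept + slope * (n + k))
            for k in range(1, sprints_ahead + 1)]


# Hypothetical observations: points completed in each of four sprints.
observed = [18, 20, 22, 24]
print(project_velocity(observed, 2))  # [26.0, 28.0]
```

The forecast is only as good as the observations behind it; with just a handful of sprints, the line mostly tells us the direction of travel.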

A forward projection of performance based on a series of observations is a typical planning approach when we are doing adaptive development. We are focused on producing the best possible outcome in view of reality, rather than on completing a predefined scope within a predefined time frame. Besides that, when doing adaptive development we do not have a stable definition of 100% at the outset, so there is no meaningful way to use any sort of “percentage complete” metric. (That doesn’t stop people from using them in non-meaningful ways, of course.)

However, forward projection based on empirical observations is not appropriate when we are doing traditional development; that is, when approach (a) as defined in the previous post is used for Iron Triangle management. For traditional development, we need to detect variances from plan, because the plan is the definition of “correct.” To do that, we typically use some flavor of “percentage complete” measurements. In this case, a metric such as velocity is meaningless. Hence the importance of Iron Triangle management in choosing appropriate metrics.
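By contrast, a variance-from-plan check might look like the following sketch. It assumes a fixed, predefined scope (so that 100% has a stable definition) and a plan that states the expected percentage complete at a checkpoint; the function names and figures are hypothetical.

```python
def percent_complete(done_units, total_units):
    """Percentage of a fixed, predefined scope that has been finished."""
    if total_units <= 0:
        raise ValueError("total scope must be positive")
    return 100.0 * done_units / total_units


def variance_from_plan(planned_pct, actual_pct):
    """Positive means ahead of plan; negative means behind plan."""
    return actual_pct - planned_pct


# Hypothetical checkpoint: the plan calls for 50% of a 200-unit scope
# to be complete, and 80 units are actually done.
actual = percent_complete(done_units=80, total_units=200)
print(actual)                            # 40.0
print(variance_from_plan(50.0, actual))  # -10.0
```

Note that the calculation depends on `total_units` being known and stable, which is exactly what adaptive development does not offer.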

What does “percentage complete” have to do with Scrum? Although it was originally conceived as a framework to support adaptive development, it turns out that Scrum is used far more often in conjunction with traditional methods than it is with adaptive methods. Because people assume velocity is a metric that automatically applies to Scrum, they try to use it in a traditional context. This often causes confusion, as the measurements are not meaningful in that context.

I’ve seen teams (or their managers) redefine “velocity” in terms of “percentage complete,” simply because it’s the only way they can make sense of the numbers they are able to obtain. The adulterated terminology then leads to further confusion about what the metrics mean; they are measuring percentage complete but calling it “velocity,” which confuses people who know what “velocity” means.

The real solution to this problem is to align metrics (and other management tools and techniques) with the methods people are really using, regardless of the buzzwords with which they label those methods. Labels are not reality.

In the next post, I will cover the third aspect of management that I think has significance for the ways in which we run software development initiatives: Delivery mode. That is a term I coined to distinguish between the discrete project model and the continuous support model of delivering software. Delivery mode has very significant implications for the approach to portfolio management and program management, but only limited impact on line management choices such as metrics to track progress.

![[diagram of V-Model]](https://neopragma.com/wp-content/uploads/2012/02/v-model-t.png)

![[diagram of RUP]](https://neopragma.com/wp-content/uploads/2012/02/rup-t.png)

Nice summary, although suffering from some key omissions. For example, one whole category of process model is missing, namely SBCE (Set-based Concurrent Engineering, aka Network Model or, as Grant liked to call it, the Braided River).

And I believe FlowChain deserves at least a mention under “Continuous Flow” models (being, in many ways, the archetype of this category).

HTH

– Bob @FlowChainSensei

Hi Bob,

Thanks for your comment.

A summary, by its nature, will have some key omissions.

I have no direct experience with SBCE, so I may be quite mistaken in my interpretation. From what I’ve been able to learn about it in a short time, I’m not sure that SBCE is at the same level of abstraction as models like linear, iterative, etc. It seems to be at a higher level of abstraction. If so, then it would be a synecdoche to compare SBCE directly with process models.

One could apply SBCE in a situation where different teams, working simultaneously on different avenues (as in “consciously explore alternatives,” “consciously enable alternatives,” and “carry forward with multiple implementations,” as described in http://xp123.com/articles/set-based-concurrent-engineering/), used different process models. The use of different processes or methodologies by each team would not interfere with the overarching SBCE approach at all. Therefore, SBCE doesn’t appear to be yet another incarnation of the same type of thing as the process models used by the different teams. If it were, then it would be impossible to use other process models in conjunction with it without “breaking the rules.” Since that is clearly possible, and indeed fairly easy, it doesn’t seem as if SBCE is just another process model. It seems more like an overarching approach. As such, it doesn’t fit in this taxonomy.

No doubt FlowChain and many others could be mentioned under this or that category. Remember that my intention was not to list every process model anyone has ever thought of. I only wanted to provide a few examples of familiar processes that illustrate the four reference models. Most people have heard of RUP, Scrum, Spiral, V-Model, etc., so they can relate to those examples. I want to avoid the slippery slope of adding everyone’s favorite to the list, one by one. That isn’t the purpose of the post.

As I see it, you either build software linearly or not. If “not,” then there are only a few truly different ways to do it, and even those are not so terribly different from one another. The numerous branded and/or commercial process frameworks out there are variations on just a few themes; as few as three, if we distill the details down to the essence. All the other differences between process models are a question of emphasis (risk, quality, time-to-market, etc.), scope (program-level, project-level, team-level, etc.), or technique (iterative waterfall, test-driven development, dimensional planning, etc.)…well, and differences in buzzwords, of course. They aren’t differences in essence.

What I’m interested in are the pragmatic implications of the ways in which people carry out the work. The theoretical foundations of the “pure” models are useful as references, but few people (if anyone) actually use any of these frameworks or methodologies in its pure form. The essential four reference models tell us enough to guide our choices when managing software development and support activities, and help us avoid getting lost in the details of the many branded variations and the even more numerous home-grown tweaks…the Scrum-buts, Spiral-buts, V-buts, RUP-buts, Kanban-buts, and XP-buts. No doubt there are SBCE-buts, too. The key is to be able to recognize which of the reference models anyone’s particular process framework most closely resembles. When we can do that, we can see clearly. To do it we need less detail, not more; fewer essential variations, not more.

Cheers,

Dave

Wow, Dave, that’s a pretty serious laundry list of processes; you just added a few to my mental list. I will add this as a reference in my CSM course.

Mark, thanks very much for the kind words. Coming from someone as knowledgeable as you, it means a lot.
